Interactive registration for 3D data fusion

| Resource Type | Date |
|---|---|
| Demo | 2016-07-12 |

Description

The results presented on this page are related to the GPI Smart Room.

Some recent results were developed in the context of the PhD thesis “Semantic and Generic Object Segmentation for Scene Analysis Using RGB-D Data” (2018) and the Bachelor thesis of Arantxa Casanova (2016), “Fusion of 3D data for interactive applications”, later presented as the paper “Interactive Registration Method for 3D data Fusion” at IC3D, Liège (Belgium), 2016.

Previous results come from the CHIL and FascinatE projects, or from the theses of former PhD researchers, such as:

(2013), “Human body analysis using depth data”

(2012), “Articulated Models for Human Motion Analysis”

(2011), “Surface Reconstruction for Multi-View Video”

(2009), “Human Motion Capture with Scalable Body Models”

(2008), “A Unified Framework for Consistent 2D/3D Foreground Object Detection”

Telepresence and data fusion:

One way to overcome some of the inherent limitations of commercial depth sensors is by capturing the same scene with several depth sensors and merging their data, i.e. by performing 3D data fusion. This allows enlarging the interaction area or improving the precision of the detection, but requires the registration of point clouds from different sensors.
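As a rough illustration of what fusion involves once the registration is known, the sketch below maps the points of a second sensor into the coordinate frame of the first and concatenates the two clouds. It assumes the registration is a rigid transform (rotation plus translation) stored as a 4x4 homogeneous matrix; the function names `make_rigid_transform` and `fuse_point_clouds` are hypothetical and are not taken from the project code.

```python
import numpy as np

def make_rigid_transform(rotation, translation):
    """Pack a 3x3 rotation and a 3-vector translation into a 4x4 matrix."""
    transform = np.eye(4)
    transform[:3, :3] = rotation
    transform[:3, 3] = translation
    return transform

def fuse_point_clouds(cloud_a, cloud_b, transform_b_to_a):
    """Express cloud_b (N x 3) in the frame of cloud_a and stack both clouds."""
    cloud_a = np.asarray(cloud_a, dtype=float)
    cloud_b = np.asarray(cloud_b, dtype=float)
    # Append a column of ones so the translation is applied by the matrix product.
    homogeneous_b = np.hstack([cloud_b, np.ones((len(cloud_b), 1))])
    b_in_a = (transform_b_to_a @ homogeneous_b.T).T[:, :3]
    return np.vstack([cloud_a, b_in_a])

# Illustrative values only: sensor B is rotated 90 degrees about the z axis
# and shifted 1 unit along x with respect to sensor A.
rotation_z90 = np.array([[0.0, -1.0, 0.0],
                         [1.0,  0.0, 0.0],
                         [0.0,  0.0, 1.0]])
transform = make_rigid_transform(rotation_z90, [1.0, 0.0, 0.0])
fused = fuse_point_clouds([[0.0, 0.0, 0.0]], [[1.0, 0.0, 0.0]], transform)
```

A real pipeline would typically also filter or downsample the overlapping region, which this sketch leaves out.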

Arantxa Casanova won the award for the Best Final Year Project in Spain in Information and Communications Technologies (2nd edition, UPF, award video) for her Bachelor thesis. In her work, she implemented registration algorithms aimed at enlarging the detection area in interactive applications by fusing 3D data from two sensors. In particular, she implemented two approaches: camera calibration from a geometrical pattern, and auto-registration using the detection of a human finger as a reference. The finger-detector method is more user-friendly and does not require external objects, while its elapsed time and registration error are similar to those obtained through classical camera calibration. The procedure is shown in Figure 1.

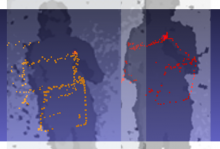

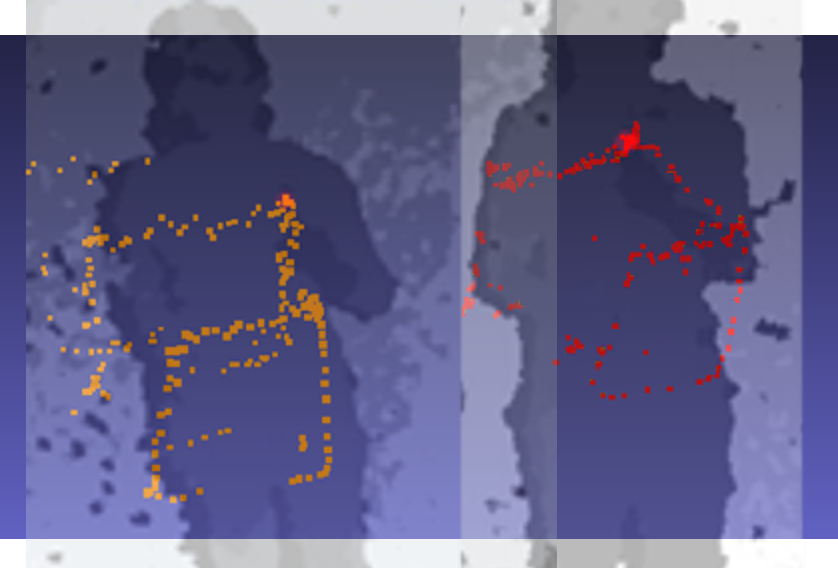

Figure 1. Finger calibration procedure. First, the user traces a geometrical shape (a 3D cube) with a finger. The finger is tracked by the two sensors (shown in orange and red in the left images from both sensors). The orange points are then registered (rotated and translated) and shown in green over the red points of the second sensor.

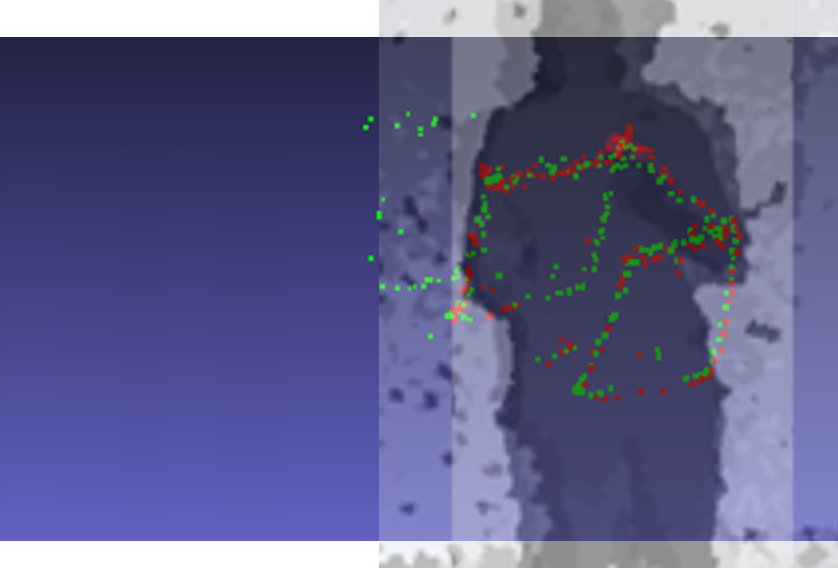
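The registration step in Figure 1 (rotating and translating the points from one sensor onto those of the other) can be sketched as a least-squares rigid alignment of corresponding 3D points. The example below uses the SVD-based Kabsch method, which is a standard technique for this problem; the source does not state which estimator the thesis actually uses, so this choice, the function names, and the synthetic cube data are all assumptions made for illustration.

```python
import numpy as np

def estimate_rigid_transform(source, target):
    """Least-squares rotation R and translation t with R @ source_i + t ~ target_i.

    source and target are N x 3 arrays of corresponding points (Kabsch method).
    """
    source = np.asarray(source, dtype=float)
    target = np.asarray(target, dtype=float)
    source_centroid = source.mean(axis=0)
    target_centroid = target.mean(axis=0)
    # 3x3 cross-covariance of the centred point sets.
    covariance = (source - source_centroid).T @ (target - target_centroid)
    u, _, vt = np.linalg.svd(covariance)
    # Flip the last axis if needed so the result is a rotation, not a reflection.
    sign = np.sign(np.linalg.det(vt.T @ u.T))
    rotation = vt.T @ np.diag([1.0, 1.0, sign]) @ u.T
    translation = target_centroid - rotation @ source_centroid
    return rotation, translation

def registration_rmse(source, target, rotation, translation):
    """Root-mean-square distance between the aligned source and the target."""
    aligned = np.asarray(source, dtype=float) @ rotation.T + translation
    residuals = aligned - np.asarray(target, dtype=float)
    return float(np.sqrt(np.mean(np.sum(residuals ** 2, axis=1))))

# Synthetic stand-in for the tracked finger positions: the 8 corners of a unit
# cube seen by sensor 1, and the same corners in the frame of sensor 2.
cube = np.array([[x, y, z] for x in (0.0, 1.0)
                 for y in (0.0, 1.0) for z in (0.0, 1.0)])
angle = np.deg2rad(30.0)
true_rotation = np.array([[np.cos(angle), -np.sin(angle), 0.0],
                          [np.sin(angle),  np.cos(angle), 0.0],
                          [0.0,            0.0,           1.0]])
true_translation = np.array([0.5, -0.2, 1.2])
cube_in_sensor2 = cube @ true_rotation.T + true_translation

rotation, translation = estimate_rigid_transform(cube, cube_in_sensor2)
error = registration_rmse(cube, cube_in_sensor2, rotation, translation)
```

With real finger tracks the correspondences are noisy, so the residual error would not vanish as it does for this synthetic cube; an error measure of this kind is one way to compare a finger-based registration against a pattern-based calibration.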

In the IC3D paper that followed, the application to an interactive scenario is extended, proposing a new interactive, fast and user-friendly method for depth sensor registration. A detector provided by Exipple Studio (gestoos) tracks a hand through the detection area of a single depth sensor. The proposed registration extends the detection area by 78.5% at 120 cm from the sensors. The video in Figure 2 shows one possible application: hand drawing in the extended detection area of two Kinect sensors.

Figure 2. Hand tracking application using two registered sensors. Points in green are detected by sensor 1, points in green by sensor 2.

This work has been one of the foundations of the 3D Telepresence project at GPI, which exploits the registration of multiple depth sensors to generate a virtual 3D view of the correspondent in a 3D teleconference application with virtual reality glasses. Figure 3 shows a snapshot of the registered point clouds of 4 Kinect sensors.

Figure 3. Snapshot of the 3D Telepresence application, with 4 registered depth sensors

Related projects:

Free viewpoint video (FVV) from multiview data

Temporally Coherent 3D Point Cloud Video Segmentation in Generic Scenes

3D Point Cloud Segmentation using a Fully Connected Conditional Random Field

People involved

| Name | Position |
|---|---|
| Alba Pujol | PhD Candidate |
| Javier Ruiz Hidalgo | Associate Professor |
| Josep R. Casas | Associate Professor |
| Xiao Lin | PhD Candidate |
| Montse Pardàs | Professor |