Cross-modal Deep Learning between Vision, Language, Audio and Speech

| Type | Start | End |
|---|---|---|
| European | Oct 2018 | Sep 2021 |

| Responsible | URL |
|---|---|
| Xavier Giro-i-Nieto | Doctorate INPhINIT Fellowships |

Reference

INPhINIT "la Caixa" Fellowship Programme 2018

Description

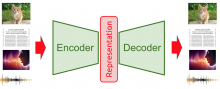

Deep neural networks have boosted the convergence of multimedia data analytics into a unified framework shared by practitioners in natural language, vision, audio and speech. Image captioning, lip reading and video sonorization are some of the first applications of a new and exciting field of research that exploits the generalisation properties of deep learning. This project aims to explore deep neural network architectures capable of projecting any multimedia signal into a joint embedding space based on its shared semantics. In this way, an image of a dog would be projected to a representation similar to those of an audio snippet of a dog barking, the natural language text “a dog barking”, or a speech excerpt of a person reading this sentence aloud.
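To make the idea of a joint embedding space concrete, the sketch below projects features from two modalities into a shared space with hypothetical linear maps (`W_image`, `W_audio`), L2-normalises them, and scores them with a triplet margin (hinge) loss, a common objective for training such spaces. The weights, dimensions, feature values and `margin` are illustrative assumptions only, not the project's actual architecture or training objective.

```python
import math
from typing import List, Sequence

Vector = List[float]
Matrix = List[List[float]]


def project(weights: Matrix, features: Sequence[float]) -> Vector:
    """Linear projection of modality-specific features into the shared space."""
    return [sum(w * x for w, x in zip(row, features)) for row in weights]


def l2_normalize(v: Sequence[float]) -> Vector:
    """Scale a vector to unit length so that a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else list(v)


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two embeddings (an all-zero vector scores 0)."""
    a_n, b_n = l2_normalize(a), l2_normalize(b)
    return sum(x * y for x, y in zip(a_n, b_n))


def triplet_loss(anchor: Sequence[float], positive: Sequence[float],
                 negative: Sequence[float], margin: float = 0.2) -> float:
    """Hinge loss: the matching pair must be more similar than the
    non-matching pair by at least `margin`, otherwise a penalty is paid."""
    return max(0.0, margin - cosine(anchor, positive) + cosine(anchor, negative))


# Hypothetical 2-D shared space: image features are 3-D, audio features are 2-D.
W_image: Matrix = [[1.0, 0.0, 0.5], [0.0, 1.0, 0.0]]
W_audio: Matrix = [[1.0, 0.0], [0.0, 1.0]]

dog_image = project(W_image, [1.0, 0.1, 0.0])  # hypothetical picture of a dog
dog_bark = project(W_audio, [0.9, 0.2])         # hypothetical clip of a dog barking
car_horn = project(W_audio, [0.0, 1.0])         # hypothetical unrelated sound

print(round(triplet_loss(dog_image, dog_bark, car_horn), 4))  # → 0.0
```

The loss is already zero here because the barking clip lies much closer to the dog image than the car horn does; swapping the positive and negative arguments yields a positive penalty, which is what gradient descent would reduce during training.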

The joint multimedia embedding space will be used to address two basic applications that share a common core machine learning technology:

a) Cross-modal retrieval:

Given a data sample characterized by one or more modalities, match it with the most similar data samples of other modalities in a catalogue. This would be the case, for example, of a visual search problem where a picture of a food dish is matched with the recipe followed to cook it, or vice versa.
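Once every catalogue item has been embedded, cross-modal retrieval reduces to ranking the catalogue by similarity to the query embedding. The sketch below assumes cosine similarity and a small in-memory catalogue; the recipe names, embedding values and the `retrieve` helper are hypothetical, and a real system would likely use an approximate nearest-neighbour index instead of a linear scan.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two embeddings (assumes non-zero vectors)."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def retrieve(query: Sequence[float],
             catalogue: Dict[str, Sequence[float]],
             k: int = 3) -> List[Tuple[str, float]]:
    """Score every catalogue item against the query embedding and
    return the top-k (item id, score) pairs, best first."""
    scored = [(item_id, cosine(query, emb)) for item_id, emb in catalogue.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical recipe embeddings, assumed to come from a text encoder.
recipe_catalogue = {
    "pancakes": [0.9, 0.1, 0.0],
    "tomato soup": [0.0, 0.8, 0.6],
    "green salad": [0.1, 0.2, 0.95],
}
dish_photo = [0.85, 0.15, 0.05]  # hypothetical image embedding of a plate of pancakes

print(retrieve(dish_photo, recipe_catalogue, k=1)[0][0])  # → pancakes
```

Because both modalities live in the same space, the "vice versa" direction uses the same function: pass a recipe embedding as the query and a catalogue of image embeddings.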

b) Cross-modal generation:

Given a data sample characterized by one or more modalities, generate a new signal in another modality that matches it. This would be the case of a lip-reading application, where a video stream of a person speaking is used to generate a text or speech signal matching the spoken words. Another option would be generating an image or diagram from an oral description of it. Generative models will rely on training schemes such as Variational Autoencoders (VAEs) and/or Generative Adversarial Networks (GANs).
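For the VAE option, two pieces of the training objective do not depend on which modality is being generated: the reparameterisation trick used to sample a latent code, and the KL-divergence regulariser between a diagonal Gaussian posterior and a standard normal prior. The plain-Python sketch below shows only those two pieces under that standard assumption; the encoder, the decoder for the target modality, the `reconstruction_error` value and the `beta` weight are hypothetical placeholders.

```python
import math
import random
from typing import List, Sequence


def reparameterize(mu: Sequence[float], logvar: Sequence[float],
                   rng: random.Random) -> List[float]:
    """Sample z = mu + sigma * eps with eps ~ N(0, I). In an autodiff
    framework this form keeps z differentiable w.r.t. mu and logvar."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, logvar)]


def kl_to_standard_normal(mu: Sequence[float], logvar: Sequence[float]) -> float:
    """Closed-form KL( N(mu, diag(exp(logvar))) || N(0, I) )."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, logvar))


def vae_loss(reconstruction_error: float, mu: Sequence[float],
             logvar: Sequence[float], beta: float = 1.0) -> float:
    """Negative ELBO: reconstruction term plus (optionally weighted) KL term."""
    return reconstruction_error + beta * kl_to_standard_normal(mu, logvar)


rng = random.Random(0)
mu, logvar = [0.5, -0.2], [0.0, -1.0]  # hypothetical encoder outputs for one video clip
z = reparameterize(mu, logvar, rng)    # latent code that a target-modality decoder would consume
print(len(z), round(kl_to_standard_normal(mu, logvar), 4))
```

The KL term pulls the posterior towards the prior, so at generation time a decoder can also be driven by latent codes sampled directly from N(0, I).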

Publications

Cross-modal Neural Sign Language Translation. In: Proceedings of the 27th ACM International Conference on Multimedia - Doctoral Symposium. Nice, France: ACM; 2019.

Wav2Pix: Speech-conditioned Face Generation using Generative Adversarial Networks. In: ICASSP. Brighton, UK: IEEE; 2019.

Cross-modal Embeddings for Video and Audio Retrieval. In: ECCV 2018 Women in Computer Vision Workshop. Munich, Germany: Springer; 2018.

Collaborators

| Name | Role | Email |
|---|---|---|
| Amanda Duarte | PhD Candidate | amanda.duarte@upc.edu |
| Amaia Salvador | PhD Candidate | amaia.salvador@upc.edu |