Multi-view/Multi-sensor scene capture, analysis and representation

| Type | Start | End |
|---|---|---|
| Internal | Mar 2004 | Nov 2015 |

| Responsible | URL |
|---|---|
| Josep R. Casas | |

Description

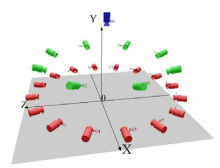

Multi-view video streams acquired in multi-camera settings need to be processed for efficient content analysis and representation, and for enhanced support of 3DTV and free viewpoint video (FVV) functionalities. The richer data available can be managed either in an image-based representation, by exploiting stereoscopic cues and epipolar constraints, or directly in 3D space, after applying a specific model (voxels, point clouds, meshes, articulated models) to reconstruct the 3D scene from the image data.
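As an illustration of the epipolar constraint mentioned above, the following minimal sketch builds a fundamental matrix from two calibrated pinhole cameras and checks that a pair of corresponding pixels satisfies `x2^T F x1 = 0`. The camera convention (`P1 = K1 [I | 0]`, `P2 = K2 [R | t]`), the function names and the numeric values are assumptions made for this example, not part of the project's software.

```python
import numpy as np

def skew(t):
    """Cross-product matrix [t]_x, so that skew(t) @ v equals np.cross(t, v)."""
    return np.array([[0.0, -t[2], t[1]],
                     [t[2], 0.0, -t[0]],
                     [-t[1], t[0], 0.0]])

def fundamental_from_cameras(K1, K2, R, t):
    """Fundamental matrix for cameras P1 = K1 [I | 0] and P2 = K2 [R | t]."""
    E = skew(t) @ R  # essential matrix
    return np.linalg.inv(K2).T @ E @ np.linalg.inv(K1)

def project(K, R, t, X):
    """Project a 3D point X (first-camera coordinates) to homogeneous pixels."""
    x = K @ (R @ X + t)
    return x / x[2]

def epipolar_residual(F, x1, x2):
    """Value of x2^T F x1; zero (up to noise) for a true correspondence."""
    return float(x2 @ F @ x1)

# Hypothetical narrow-baseline stereo pair: same intrinsics, 10 cm horizontal shift.
K = np.array([[500.0, 0.0, 320.0],
              [0.0, 500.0, 240.0],
              [0.0, 0.0, 1.0]])
R = np.eye(3)
t = np.array([-0.1, 0.0, 0.0])
F = fundamental_from_cameras(K, K, R, t)

X = np.array([0.2, -0.1, 2.0])           # a scene point in front of both cameras
x1 = project(K, np.eye(3), np.zeros(3), X)
x2 = project(K, R, t, X)
residual = epipolar_residual(F, x1, x2)  # close to zero for this true match
```

In practice the residual is used to restrict the search for a match of `x1` to a line in the second image, or to reject candidate matches whose residual is too large.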

The first case, called “Multi-view stereo” for image-based analysis, coding or rendering (IBR), is the straightforward approach followed in most narrow-baseline camera settings where only a few cameras are used. This approach allows easy FVV navigation by interpolating views along the baseline, but is of limited use outside the close neighborhood of the acquisition viewpoints. In addition, the computational cost of image-based approaches can become intractable as the number of input viewpoints grows.

An alternative approach in richer multi-view scenarios is to reconstruct the 3D shapes of the elements in the scene. Available techniques include the fusion of depth maps in Multi-view stereo, and the computation of Visual Hulls by Shape from Silhouette, Photo Hulls by Space Carving, and Scene Flows by 3D motion estimation. Most of these techniques require separating foreground from background elements in the scene. 3D reconstruction methods provide a representation with 3D support that can explain, in a single compact structure, the multi-view cues from the input images at an arbitrary level of detail. Whereas image-based multi-view analysis obtains scene features directly from image pixels and applies epipolar constraints between features detected in different views, the final goal of 3D analysis must be to obtain representative features of a scene directly from the 3D data.
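To make the Shape from Silhouette idea concrete, here is a minimal voxel-carving sketch: a voxel is kept in the visual hull only if it projects inside the foreground silhouette of every camera. The function name, the use of 3x4 projection matrices and boolean masks, and the choice to carve voxels that fall outside any image are assumptions of this example rather than a description of the project's implementation.

```python
import numpy as np

def carve_visual_hull(voxels, cameras, silhouettes):
    """Return a boolean occupancy flag per voxel.

    voxels:      (N, 3) array of voxel centres in world coordinates.
    cameras:     list of 3x4 projection matrices P = K [R | t].
    silhouettes: list of boolean HxW foreground masks, one per camera.
    """
    inside = np.ones(len(voxels), dtype=bool)
    homog = np.hstack([voxels, np.ones((len(voxels), 1))])
    for P, mask in zip(cameras, silhouettes):
        proj = homog @ P.T                      # (N, 3) homogeneous pixels
        z = proj[:, 2]
        in_front = z > 0
        safe_z = np.where(in_front, z, 1.0)     # avoid division by zero
        u = np.round(proj[:, 0] / safe_z).astype(int)
        v = np.round(proj[:, 1] / safe_z).astype(int)
        h, w = mask.shape
        visible = in_front & (u >= 0) & (u < w) & (v >= 0) & (v < h)
        hit = np.zeros(len(voxels), dtype=bool)
        hit[visible] = mask[v[visible], u[visible]]
        inside &= hit                           # intersection over all views
    return inside

# Hypothetical setup: two orthogonal cameras, 5 units from the origin.
K = np.array([[100.0, 0.0, 50.0],
              [0.0, 100.0, 50.0],
              [0.0, 0.0, 1.0]])
t = np.array([[0.0], [0.0], [5.0]])
R1 = np.eye(3)                                  # looks along world +z
R2 = np.array([[0.0, 1.0, 0.0],
               [0.0, 0.0, 1.0],
               [1.0, 0.0, 0.0]])                # looks along world +x
cameras = [K @ np.hstack([R1, t]), K @ np.hstack([R2, t])]

mask = np.zeros((100, 100), dtype=bool)
mask[40:61, 40:61] = True                       # square silhouette in both views
silhouettes = [mask, mask]

voxels = np.array([[0.0, 0.0, 0.0],             # inside both silhouettes
                   [2.0, 0.0, 0.0],             # outside the first silhouette
                   [0.0, 0.0, 2.0]])            # outside the second silhouette
occupancy = carve_visual_hull(voxels, cameras, silhouettes)
```

The sketch also shows why foreground/background separation matters for these techniques: the quality of the hull depends directly on the quality of the input masks, since a single wrongly empty silhouette pixel carves away every voxel that projects onto it.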

A significant challenge for the future of 3DTV is the adoption of techniques currently being researched in the field of 3D reconstruction to obtain a compact 3D representation of the scene that truly frees the user's viewpoint for enhanced 3D navigation. The target of this work package is to research efficient geometry-based representations for the data captured in multi-camera setups.

The introduction in October 2010, and the current widespread availability, of consumer depth sensors such as MS Kinect (TM) has fostered significant interest thanks to their ability to capture 3D data at low cost. Applications have emerged in robotics, 3D reconstruction, object recognition, and pose and gesture analysis, along with some initial applications in AV production. The combination of data acquired with multiple visual and depth sensors offers significant potential for all these applications.
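A depth sensor delivers a depth value per pixel, and a common first step before combining it with other sensors is to back-project the depth map into a 3D point cloud. The sketch below does this with a pinhole model. The intrinsics `fx`, `fy`, `cx`, `cy`, the convention that a depth of 0 marks an invalid pixel, and the function name are assumptions of this example, not calibration values of any particular device.

```python
import numpy as np

def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project a depth map into 3D points in the camera frame.

    depth: HxW array of depths in metres; 0 marks pixels with no measurement.
    Returns an (M, 3) array with one (x, y, z) row per valid pixel,
    in row-major pixel order.
    """
    h, w = depth.shape
    v, u = np.mgrid[0:h, 0:w]        # pixel row (v) and column (u) indices
    valid = depth > 0
    z = depth[valid]
    x = (u[valid] - cx) * z / fx     # invert u = fx * x / z + cx
    y = (v[valid] - cy) * z / fy     # invert v = fy * y / z + cy
    return np.column_stack([x, y, z])

# Tiny hypothetical depth map: one invalid pixel, three pixels at 2 m.
depth = np.array([[0.0, 2.0],
                  [2.0, 2.0]])
points = depth_to_points(depth, fx=1.0, fy=1.0, cx=0.0, cy=0.0)
```

Point clouds from several sensors can then be brought into a common world frame by applying each sensor's extrinsic rotation and translation, which are assumed to be known from calibration.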

Publications

| Stochastic optimization and interactive machine learning for human motion analysis. Signal Theory and Communications. 2014. |
| Articulated Models for Human Motion Analysis. 2012. |

Demos and Resources

| Free viewpoint video (FVV) from multiview data | Demo |
| Panoramic TV Control | Demo |
| ColorTip | Dataset |
| Kinect database. Foreground segmentation | Dataset |

Collaborators

| Josep Pujal | Systems Engineer | josep.pujal@upc.edu |
| Josep R. Casas | Associate Professor | josep.ramon.casas@upc.edu |
| Albert Gil Moreno | Software Engineer | albert.gil@upc.edu |
| Javier Ruiz Hidalgo | Associate Professor | j.ruiz@upc.edu |
| Adolfo López | PhD Candidate | adolf.lopez@upc.edu |
| Marc Maceira | PhD Candidate | marc.maceira@upc.edu |
| Xavier Suau | PhD Candidate | xavier.suau@upc.edu |
| Marcel Alcoverro | PhD Candidate | marcel.alcoverro.vidal@upc.edu |
| Alba Pujol | PhD Candidate | alba.pujol@upc.edu |