The lack of knowledge of the micromobility regulations by e-scooter users is an important factor behind some of\ the accidents involving these vehicles. We present two modules that can increase the safety of the users and\ pedestrians: First, a computer vision model that analyses the video feed captured with a smartphone attached to\ the e-scooter, and predicts in real-time the type of lane in which the user is riding. This knowledge is used by an\ application which combines this information with GSNN location information and a database of mobility\ regulations, and informs the user when he/she is not complying with these regulations. Second, an accident\ detection system, using the smartphone accelerometer, that detects if there is a fall during the riding, so that the\ app can contact the authorities to determine the appropriate response. The experimental results show excellent\ results for both modules.

}, keywords = {accident detection, computer Vision, deep learning, lane classification, Micromobility, RideSafeUM}, author = {Morros, J.R. and Broquetas, A. and Mateo, A. and Puig, J. and Davins, M.} } @article {cBellver-Bueno20, title = {RefVOS: A Closer Look at Referring Expressions for Video Object Segmentation}, journal = {Multimedia Tools and Applications}, year = {2022}, month = {07/2022}, abstract = {The task of video object segmentation with referring expressions (language-guided VOS) is to, given a linguistic phrase and a video, generate binary masks for the object to which the phrase refers. Our work argues that existing benchmarks used for this task are mainly composed of trivial cases, in which referents can be identified with simple phrases. Our analysis relies on a new categorization of the phrases in the DAVIS-2017 and Actor-Action datasets into trivial and non-trivial REs, with the non-trivial REs annotated with seven RE semantic categories. We leverage this data to analyze the results of RefVOS, a novel neural network that obtains competitive results for the task of language-guided image segmentation and state of the art results for language-guided VOS. Our study indicates that the major challenges for the task are related to understanding motion and static actions.

\

Wendelstein 7-X (W7-X) is the leading experiment on the path of demonstrating that stellarators are a feasible concept for a future power plant. One of its main goals is to prove quasi-steady-state operation in a reactor-relevant parameter regime. To this end, the surveillance and protection of the water-cooled plasma facing components (PFCs) against overheating is fundamental to guarantee a safe steady-state high-heat-flux operation. The fast reaction times required to prevent damage to the device makes imperative to fully automate the analysis of the thermographic images of the PFCs to detect the thermal events in real-time and timely interrupt operation through the interlock system if a critical event is detected. This imaging system, being the last line of defense, must be based on highly reliable image and risk analysis techniques to guarantee the integrity of the device under all circumstances. During the past operation phases, W7-X was equipped with inertially-cooled test divertor units and although the system still required manual supervision, several concepts to deal with the intrinsic 3D geometry of stellarators were put under test. With the experience gained, a new PFC protection system has been designed based on advanced image segmentation techniques. In order to achieve steady-state operation, however, a feedback control system able to prevent unnecessary interruptions of plasma operation is needed. The feedback control shall be guided by the image analysis, requiring a high-level scene understanding by means of computer vision and machine learning algorithms. Based on this analysis, the feedback control can then take the most effective counter measures to prevent the PFCs overloading by acting on the heating systems, the strike-line shape and positioning or on plasma detachment. In this work, we present the design of the real-time image analysis system for the protection of the PFCs of W7-X through the Interlock, and its validation results based on the analysis of the extensive existing experimental data. Additionally, we present the roadmap towards a feedback control system for the protection of the device in long high-performance plasmas.

}, url = {https://www.clpu.es/en/ECPD2021_Schedule/}, author = {Puig-Sitjes, A. and Jakubowski, M. and Gao, Y. and Drewelow, P. and Niemann, H. and Fellinger, J. and Moncada, V. and Pisano, F. and Belafdil, C. and Mitteau, R. and Aumeunier, M.-H. and Cannas, B. and Casas, J. and Salembier, P. and Clemente, R. and Dumke, S. and Winter, A. and Laqua, H. and Bluhm, T. and Brandt, K.} } @article {aPuig-Sitjes21, title = {Real-time detection of overloads on the plasma-facing components of Wendelstein 7-X}, journal = {Applied sciences (Basel)}, volume = {11}, year = {2021}, month = {12/2021}, chapter = {1}, issn = {2076-3417}, doi = {10.3390/app112411969}, url = {http://hdl.handle.net/2117/361558}, author = {Puig-Sitjes, A. and Jakubowski, M. and Naujoks, D. and Gao, Y. and Drewelow, P. and Niemann, H. and Felinger, J. and Casas, J. and Salembier, P. and Clemente, R.} } @conference {cPardas20, title = {Refinement network for unsupervised on the scene foreground segmentation}, booktitle = {EUSIPCO European Signal Processing Conference}, year = {2020}, month = {08/2020}, publisher = {European Association for Signal Processing (EURASIP)}, organization = {European Association for Signal Processing (EURASIP)}, abstract = {In this paper we present a network for foreground segmentation based on background subtraction which does not require specific scene training. The network is built as a refinement step on top of classic state of the art background subtraction systems. In this way, the system combines the possibility to define application oriented specifications as background subtraction systems do, and the highly accurate object segmentation abilities of deep learning systems. The refinement system is based on a semantic segmentation network. The network is trained on a common database and is not fine-tuned for the specific scenes, unlike existing solutions for foreground segmentation based on CNNs. Experiments on available databases show top results among unsupervised methods.

}, url = {https://www.eurasip.org/Proceedings/Eusipco/Eusipco2020/pdfs/0000705.pdf}, author = {M. Pard{\`a}s and G. Canet} } @conference {cHerrera-Palacioa, title = {Recurrent Instance Segmentation using Sequences of Referring Expressions}, booktitle = {NeurIPS workshop on Visually Grounded Interaction and Language (ViGIL)}, year = {2019}, month = {09/2019}, address = {Vancouver, Canada}, abstract = {The goal of this work is segmenting the objects in an image which are referred to by a sequence of linguistic descriptions (referring expressions). We propose a deep neural network with recurrent layers that output a sequence of binary masks, one for each referring expression provided by the user. The recurrent layers in the architecture allow the model to condition each predicted mask on the previous ones, from a spatial perspective within the same image. Our multimodal approach uses off-the-shelf architectures to encode both the image and the referring expressions. The visual branch provides a tensor of pixel embeddings that are concatenated with the phrase embeddings produced by a language encoder. Our experiments on the RefCOCO dataset for still images indicate how the proposed architecture successfully exploits the sequences of referring expressions to solve a pixel-wise task of instance segmentation.

\

The goal of this work is segmenting the object in an image or video which is referred to by a linguistic description (referring expression).\ We propose a deep neural network with recurrent layers that output a sequence of binary masks, one for each referring expression provided by the user.\ The recurrent layers in the architecture allow the model to condition each predicted mask on the previous ones, from a spatial perspective within the same image.\ Our multimodal approach uses off-the-shelf architectures to encode both the image and the referring expressions.\ The visual branch provides a tensor of pixel embeddings that are concatenated with the phrase embeddings produced by a language encoder.\ We focus our study on comparing different configurations to encode and combine the visual and linguistic representations.\ Our experiments on the RefCOCO dataset for still images indicate how the proposed architecture successfully exploits the referring expressions to solve a pixel-wise task of instance segmentation.

\

Geometric 3D scene classification is a very challenging task. Current methodologies extract the geometric information using only a depth channel provided by an RGBD sensor. These kinds of methodologies introduce possible errors due to missing local geometric context in the depth channel. This work proposes a novel Residual Attention Graph Convolutional Network that exploits the intrinsic geometric context inside a 3D space without using any kind of point features, allowing the use of organized or unorganized 3D data. Experiments are done in NYU Depth v1 and SUN-RGBD datasets to study the different configurations and to demonstrate the effectiveness of the proposed method. Experimental results show that the proposed method outperforms current state-of-the-art in geometric 3D scene classification tasks.\

}, doi = {10.1109/ICCVW.2019.00507}, url = {https://imatge-upc.github.io/ragc/}, author = {Mosella-Montoro, Albert and Ruiz-Hidalgo, J.} } @conference {cGullon, title = {Retinal lesions segmentation using CNNs and adversarial training}, booktitle = {International Symposium on Biomedical Imaging (ISBI 2019)}, year = {2019}, month = {04/2019}, abstract = {Diabetic retinopathy (DR) is an eye disease associated with diabetes mellitus that affects retinal blood vessels. Early detection is crucial to prevent vision loss. The most common method for detecting the disease is the analysis of digital fundus images, which show lesions of small vessels and functional abnormalities.

Manual detection and segmentation of lesions is a time-consuming task requiring proficient skills. Automatic methods for retinal image analysis could help ophthalmologists in large-scale screening programs of population with diabetes mellitus allowing cost-effective and accurate diagnosis.

In this work we propose a fully convolutional neural network with adversarial training to automatically segment DR lesions in funduscopy images.\

}, author = {Nat{\`a}lia Gull{\'o}n and Ver{\'o}nica Vilaplana} } @conference {cVenturaa, title = {RVOS: End-to-End Recurrent Network for Video Object Segmentation}, booktitle = {CVPR}, year = {2019}, month = {06/2019}, publisher = {OpenCVF / IEEE}, organization = {OpenCVF / IEEE}, address = {Long Beach, CA, USA}, abstract = {Multiple object video object segmentation is a challenging task, specially for the zero-shot case, when no object mask is given at the initial frame and the model has to find the objects to be segmented along the sequence. In our work, we propose RVOS, a recurrent network that is fully end-to-end trainable for multiple object video object segmentation, with a recurrence module working on two different domains: (i) the spatial, which allows to discover the different object instances within a frame, and (ii) the temporal, which allows to keep the coherence of the segmented objects along time. We train RVOS for zero-shot video object segmentation and are the first ones to report quantitative results for DAVIS-2017 and YouTube-VOS benchmarks. Further, we adapt RVOS for one-shot video object segmentation by using the masks obtained in previous time-steps as inputs to be processed by the recurrent module. Our model reaches comparable results to state-of-the-art techniques in YouTube-VOS benchmark and outperforms all previous video object segmentation methods not using online learning in the DAVIS-2017 benchmark.

\

\

We present a recurrent model for semantic instance segmentation that sequentially generates pairs of masks and their associated class probabilities for every object in an image. Our proposed system is trainable end-to-end, does not require post-processing steps on its output and is conceptually simpler than current methods relying on object proposals. We observe that our model learns to follow a consistent pattern to generate object sequences, which correlates with the activations learned in the encoder part of our network. We achieve competitive results on three different instance segmentation benchmarks (Pascal VOC 2012, Cityscapes and CVPPP Plant Leaf Segmentation).

We present a recurrent model for semantic instance segmentation that sequentially generates binary masks and their associated class probabilities for every object in an image. Our proposed system is trainable end-to-end from an input image to a sequence of labeled masks and, compared to methods relying on object proposals, does not require post-processing steps on its output. We study the suitability of our recurrent model on three different instance segmentation benchmarks, namely Pascal VOC 2012, CVPPP Plant Leaf Segmentation and Cityscapes. Further, we analyze the object sorting patterns generated by our model and observe that it learns to follow a consistent pattern, which correlates with the activations learned in the encoder part of our network.

}, author = {Amaia Salvador and M{\'\i}riam Bellver and Baradad, Manel and V{\'\i}ctor Campos and Marqu{\'e}s, F. and Jordi Torres and Xavier Gir{\'o}-i-Nieto} } @mastersthesis {xFojo, title = {Reproducing and Analyzing Adaptive Computation Time in PyTorch and TensorFlow}, year = {2018}, abstract = {The complexity of solving a problem can differ greatly to the complexity of posing that problem. Building a Neural Network capable of dynamically adapting to the complexity of the inputs would be a great feat for the machine learning community. One of the most promising approaches is Adaptive Computation Time for Recurrent Neural Network (ACT) \parencite{act}. In this thesis, we implement ACT in two of the most used deep learning frameworks, PyTorch and TensorFlow. Both are open source and publicly available. We use this implementations to evaluate the capability of ACT to learn algorithms from examples. We compare ACT with a proposed baseline where each input data sample of the sequence is read a fixed amount of times, learned as a hyperparameter during training. Surprisingly, we do not observe any benefit from ACT when compared with this baseline solution, which opens new and unexpected directions for future research.

Convolutional Neural Networks (CNNs) are frequently used to tackle image classification and segmentation problems due to its recently proven successful results. In particular, in medical domain, it is more and more common to see automated techniques to help doctors in their diagnosis. In this work, we study the retinal lesions segmentation problem using CNNs on the Indian Diabetic Retinopathy Image Dataset (IDRiD). Additionally, the idea of adversarial training used by Generative Adversarial Networks (GANs) will be also added to the previous CNN to improve its results, making segmentation maps more accurate and realistic. A comparison between these two architectures will be made. One of the main challenges we will be facing is the high-imbalance between lesions and healthy parts of the retina and the fact that some lesion classes are very scattered in small fractions. Thus, different loss functions, optimizers and training schemes will be studied and evaluated to see which one best addresses our problem.

}, author = {Nat{\`a}lia Gull{\'o}n}, editor = {Ver{\'o}nica Vilaplana} } @conference {xSalvadora, title = {Recurrent Semantic Instance Segmentation}, booktitle = {NIPS 2017 Women in Machine Learning Workshop (WiML)}, year = {2017}, month = {12/2017}, publisher = {NIPS 2017 Women in Machine Learning Workshop}, organization = {NIPS 2017 Women in Machine Learning Workshop}, address = {Long Beach, CA, USA}, abstract = {We present a recurrent model for end-to-end instance-aware semantic segmentation that is able to sequentially generate pairs of masks and class predictions. Our proposed system is trainable end-to-end for instance segmentation, does not require further post-processing steps on its output and is conceptually simpler than current methods relying on object proposals. While recent works have proposed recurrent architectures for instance segmentation, these are trained and evaluated for a single category.

Our model is composed of a series of Convolutional LSTMs that are applied in chain with upsampling layers in between to predict a sequence of binary masks and associated class probabilities. Skip connections are incorporated in our model by concatenating the output of the corresponding convolutional layer in the base model with the upsampled output of the ConvLSTM. Binary masks are finally obtained with a 1x1 convolution with sigmoid activation. We concatenate the side outputs of all ConvLSTM layers and apply a per-channel max-pooling operation followed by a single fully-connected layer with softmax activation to obtain the category for each predicted mask.

We train and evaluate our models with the Pascal VOC 2012 dataset. Future work will aim at analyzing and understanding the behavior of the network on other datasets, comparing the system with state of the art solutions and study the relationship of the learned object discovery patterns of our model with those of humans.

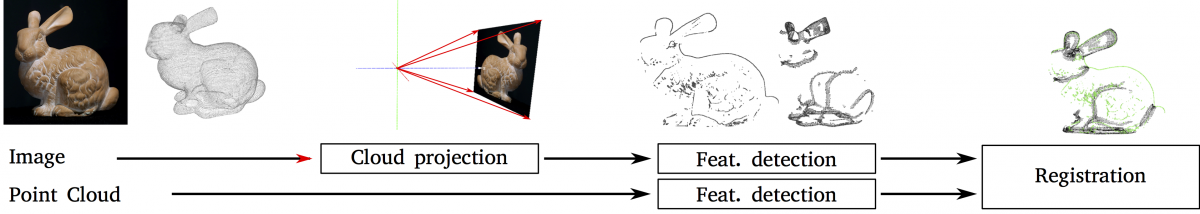

}, author = {Amaia Salvador and Baradad, Manel and Xavier Gir{\'o}-i-Nieto and Marqu{\'e}s, F.} } @conference {cPujol-Miro17, title = {Registration of Images to Unorganized 3D Point Clouds Using Contour Cues}, booktitle = {The 25th European Signal Processing Conference (EUSIPCO 2017)}, year = {2017}, month = {08/2017}, publisher = {Eurasip}, organization = {Eurasip}, address = {Kos island, Greece}, abstract = {

Low resolution commercial 3D sensors contribute to computer vision tasks even better when the analysis is carried out in a combination with higher resolution image data. This requires registration of 2D images to unorganized 3D point clouds. In this paper we present a framework for 2D-3D data fusion to obtain directly the camera pose of a 2D color image in relation to a 3D point cloud. It includes a novel multiscale intensity feature detection algorithm and a modified ICP procedure based on point-to-line distances. The framework is generic for several data types (such as CAD designs or LiDAR data without photometric information), and results show that performance is comparable to the state of the art, while avoiding manual markers or specific patterns on the data.

}, keywords = {Cameras, Feature extraction, Iterative closest point algorithm, Sensors, Signal processing algorithms, Three-dimensional displays}, doi = {10.23919/EUSIPCO.2017.8081173}, url = {https://www.eusipco2017.org/}, author = {A. Pujol-Mir{\'o} and Ruiz-Hidalgo, J. and Casas, J.} } @phdthesis {dVaras16, title = {Region-based Particle Filter Leveraged with a Hierarchical Co-clustering}, year = {2016}, month = {11/2016}, school = {UPC}, type = {PhD}, address = {Barcelona}, abstract = {In this thesis, we exploit the hierarchical information associated with images to tackle two fundamental problems of computer vision: video object segmentation and video segmentation.

In the first part of the thesis, we present a video object segmentation approach that extends the well known particle filter algorithm to a region based image representation. Image partition is considered part of the particle filter measurement, which enriches the available information and leads to a reformulation of the particle filter theory. We define particles as unions of regions in the current image partition and their propagation is computed through a single optimization process. During this propagation, the prediction step is performed using a co-clustering between the previous image object partition and a partition of the current one, which allows us to tackle the evolution of non-rigid structures.

The second part of the thesis is devoted to the exploration of a co-clustering technique for video segmentation. This technique, given a collection of images and their associated hierarchies, clusters nodes from these hierarchies to obtain a coherent multiresolution representation of the image collection. We formalize the co-clustering as a Quadratic Semi-Assignment Problem and solve it with a linear programming relaxation approach that makes e ffective use of information from hierarchies. Initially, we address the problem of generating an optimal, coherent partition per image and, afterwards, we extend this method to a multiresolution framework. Finally, we particularize this framework to an iterative multiresolution video segmentation algorithm in sequences with small variations.

Finally, in the last part of the thesis we validate the presented techniques for object and video segmentation using the proposed algorithms as tools to tackle problems in a context for which they were not initially thought.

}, author = {David Varas}, editor = {Marqu{\'e}s, F.} } @mastersthesis {xPorta, title = {Rapid Serial Visual Presentation for Relevance Feedback in Image Retrieval with EEG Signals}, year = {2015}, abstract = {Studies: Bachelor degree in Science and Telecommunication Technologies Engineering at\ Telecom BCN-ETSETB\ from the Technical University of Catalonia (UPC)

Grade: A (9/10)

This thesis explores the potential of relevance feedback for image retrieval using EEG signals for human-computer interaction. This project aims at studying the optimal parameters of a rapid serial visual presentation (RSVP) of frames from a video database when the user is searching for an object instance. The simulations reported in this thesis assess the trade-off between using a small or a large amount of images in each RSVP round that captures the user feedback. While short RSVP rounds allow a quick learning of the user intention from the system, RSVP rounds must also be long enough to let users generate the P300 EEG signals which are triggered by relevant images. This work also addresses the problem of how to distribute potential relevant and non-relevant images in a RSVP round to maximize the probabilities of displaying each relevant frame separated at least 1 second from another relevant frame, as this configuration generates a cleaner P300 EEG signal. The presented simulations are based on a realistic set up for video retrieval with a subset of 1,000 frames from the TRECVID 2014 Instance Search task.

}, keywords = {eeg, feedback, image, relevance, retrieval}, author = {Porta, Sergi}, editor = {Amaia Salvador and Mohedano, Eva and Xavier Gir{\'o}-i-Nieto and O{\textquoteright}Connor, N.} } @mastersthesis {xLopez15, title = {Reconstrucci{\'o} de la forma del rostre a partir de contorns}, year = {2015}, abstract = {La reconstrucci{\'o} i el modelatge de cares 3D s{\textquoteright}han convertit en els {\'u}ltims anys en una l{\'\i}nia d{\textquoteright}investigaci{\'o} molt activa a causa de la seva utilitzaci{\'o} en gran nombre d{\textquoteright}aplicacions com s{\'o}n el reconeixement facial en 3D, modelatge de cares en videojocs, cirurgia est{\`e}tica, etc. Durant les {\'u}ltimes d{\`e}cades s{\textquoteright}han desenvolupat m{\'u}ltiples t{\`e}cniques computacionals de reconstrucci{\'o} facial 3D. Una de les tecnologies m{\'e}s utilitzades est{\`a} basada en imatges 2D i m{\`e}todes estad{\'\i}stics (3D Morphable Models). L{\textquoteright}estimaci{\'o} de la forma de la cara abordada amb models estad{\'\i}stics, t{\'e} com a objectiu principal trobar un conjunt de par{\`a}metres de la cara que millor s{\textquoteright}ajusten a una imatge o un conjunt d{\textquoteright}imatges. Per a aquest projecte es disposa d{\textquoteright}un model estad{\'\i}stic capa{\c c} d{\textquoteright}estimar la forma 3D del rostre quan una cara o un conjunt de contorns 2D est{\`a} disponible des de m{\'u}ltiples punts de vista. Aquest model estima directament l{\textquoteright}estructura de la cara 3D mitjan{\c c}ant l{\textquoteright}{\'u}s d{\textquoteright}una matriu de regressi{\'o} constru{\"\i}da a trav{\'e}s de PLS (Partial Least Squares). Despr{\'e}s de la validaci{\'o} del model i els bons resultats obtinguts en la predicci{\'o} de subjectes sint{\`e}tics, aquest treball proposa un nou enfoc, entrenar el model amb subjectes reals a partir d{\textquoteright}una seq{\"u}{\`e}ncia de v{\'\i}deo. D{\textquoteright}aquesta forma s{\textquoteright}obtenen reconstruccions de la forma de la cara 3D amb dades reals. Per tant, l{\textquoteright}objectiu d{\textquoteright}aquest projecte {\'e}s la definici{\'o}, la implementaci{\'o} software i l{\textquoteright}an{\`a}lisi d{\textquoteright}un procediment que ens permeti ajustar un model estad{\'\i}stic facial tridimensional gen{\`e}ric a les caracter{\'\i}stiques facials espec{\'\i}fiques d{\textquoteright}un individu a partir dels contorns del seu rostre.

}, author = {Laia del Pino L{\'o}pez}, editor = {Ver{\'o}nica Vilaplana and Josep Ramon Morros} } @conference {cMaceira15, title = {Region-based depth map coding using a 3D scene representation}, booktitle = {IEEE International Conference on Acoustics, Speech and Signal Processing}, year = {2015}, month = {04/2015}, address = {Brisbane, Australia}, abstract = {In 3D video, view synthesis is used to process new virtual views between encoded camera views. Errors in the coding of the depth maps introduce geometry inconsistencies in synthesized views. In this paper, a 3D plane representation of the scene is presented which improve the performance of current standard video codecs in the view synthesis domain. Depth maps are segmented into regions without sharp edges and represented with a plane in the 3D world scene coordinates. This 3D representation allows an efficient representation while preserving the 3D characteristics of the scene. Experimental results are provided obtaining gains from 10 to 40 \% in bitrate compared to HEVC.

}, author = {Maceira, M. and Morros, J.R. and Ruiz-Hidalgo, J.} } @mastersthesis {xGirbau15, title = {Region-based Particle Filter}, year = {2015}, abstract = {In this project the implementation of a video object tracking technique based on a particle filter that uses the partitions of the various frames in the video has been tackled. This is an extension of the standard particle filter tracker in which unions of regions of the image are used to generate particles. By doing so, the tracking of the object of interest through the video sequence is expected to be done in a more accurate and robust way. One of the main parts of this video object tracker is a co-clustering technique that allows having an initial estimation of the object in the current frame, relying on the instance of the same object in a previous frame. While developing the object tracker, we realized the importance of this co-clustering technique, not only in the context of the current video tracker but as a basic tool for several of the research projects in the image group. Therefore, we decided to concentrate on the implementation of a generic, versatile co-clustering technique instead of the simple version that was necessary for the tracking problem. This way, the main goal of this project consists on implementing the co-clustering method presented in an accurate way while presenting a low computation time. Moreover, the complete Region-based particle filter for tracking purposes is presented. Therefore, the aim of this Final Degree Project is, mainly, to give a guideline to future researchers who will use this algorithm; to help understand and apply the mentioned co-clustering for any project in need of this method.

}, url = {http://upcommons.upc.edu/bitstream/handle/2099.1/25370/Andreu_Girbau_Xalabarder_TFG.pdf?sequence=4\&isAllowed=y}, author = {Girbau Xalabarder, A.}, editor = {David Varas and Marqu{\'e}s, F.} } @mastersthesis {xBosch, title = {Region-oriented Convolutional Networks for Object Retrieval}, year = {2015}, abstract = {Advisors: Amaia Salvador and\ Xavier Gir{\'o}-i-Nieto\ (UPC)\

Study program: Engineering on Audiovisual Systems (4 years) at Escola d{\textquoteright}Enginyeria de Terrassa\ (UPC)

Grade: A (9.6/10)

This thesis is framed in the computer vision field, addressing a challenge related to instance search. Instance search consists in searching for occurrences of a certain visual instance on a large collection of visual content, and generating a ranked list of results sorted according to their relevance to a user query. This thesis builds up on existing work presented at the TRECVID Instance Search Task in 2014, and explores the use of local deep learning features extracted from object proposals. The performance of different deep learning architectures (at both global and local scales) is evaluated, and a thorough comparison of them is performed. Secondly, this thesis presents the guidelines to follow in order to fine-tune a convolutional neural network for tasks such as image classification, object detection and semantic segmentation. It does so with the final purpose of fine tuning SDS, a CNN trained for both object detection and semantic segmentation, with the recently released Microsoft COCO dataset.

\

A method to obtain accurate hand gesture classification and fingertip localization from depth images is proposed. The Oriented Radial Distribution feature is utilized, exploiting its ability to globally describe hand poses, but also to locally detect fingertip positions. Hence, hand gesture and fingertip locations are characterized with a single feature calculation. We propose to divide the difficult problem of locating fingertips into two more tractable problems, by taking advantage of hand gesture as an auxiliary variable. Along with the method we present the ColorTip dataset, a dataset for hand gesture recognition and fingertip classification using depth data. ColorTip contains sequences where actors wear a glove with with colored fingertips, allowing automatic annotation. The proposed method is evaluated against recent works in several datasets, achieving promising results in both gesture classification and fingertip localization.

}, keywords = {dataset, fingertip classification, hand gesture recognition, interactivity, range camera}, issn = {0262-8856}, doi = {10.1016/j.imavis.2014.04.015}, url = {http://www.sciencedirect.com/science/article/pii/S0262885614000845}, author = {Suau, X. and Alcoverro, M. and L{\'o}pez-M{\'e}ndez, A. and Ruiz-Hidalgo, J. and Casas, J.} } @conference {cVaras14, title = {Region-based Particle Filter for Video Object Segmentation}, booktitle = {CVPR - Computer Vision and Pattern Recognition}, year = {2014}, publisher = {IEEE}, organization = {IEEE}, address = {Ohio}, abstract = {We present a video object segmentation approach that\ extends the particle filter to a region-based image representation. Image partition is considered part of the particle filter measurement, which enriches the available information and leads to a re-formulation of the particle filter.\ The prediction step uses a co-clustering between the previous image object partition and a partition of the current\ one, which allows us to tackle the evolution of non-rigid\ structures. Particles are defined as unions of regions in the\ current image partition and their propagation is computed\ through a single co-clustering. The proposed technique is\ assessed on the SegTrack dataset, leading to satisfactory\ perceptual results and obtaining very competitive pixel error rates compared with the state-of-the-art methods.

}, keywords = {co-clustering, particle filter, segmentation, tracking}, author = {David Varas and Marqu{\'e}s, F.} } @article {aSalembier14, title = {Remote sensing image processing with graph cut of Binary Partition Trees}, journal = {Advances in computing science, control and communications}, volume = {69}, year = {2014}, month = {04/2014}, pages = {185-196}, issn = {1870-4069}, author = {Salembier, P. and S. Foucher} } @conference {cGallego14, title = {Robust 3D SFS reconstruction based on reliability maps}, booktitle = {ICIP, IEEE International Conference on Image Processing}, year = {2014}, month = {10/2014}, abstract = {This paper deals with Shape from Silhouette (SfS) volumetric reconstruction in the context of multi-view smart room scenarios. The method that we propose first computes a 2D foreground object segmentation in each one of the views, by using region-based models to model the foreground, and shadow classes, and a pixel-wise model to model the background class. Next, we calculate the reliability maps between foreground and background/shadow classes in each view, by computing the hellinger distance among models. These 2D reliability maps are taken into account finally, in the 3D SfS reconstruction algorithm, to obtain an enhanced final volumetric reconstruction. The advantages of our system rely on the possibility to obtain a volumetric representation which automatically defines the optimal tolerance to errors for each one of the voxels of the volume, with a low rate of false positive and false negative errors. The results obtained by using our proposal improve the traditional SfS reconstruction computed with a fixed tolerance for the overall volume.

}, author = {Gallego, J. and M. Pard{\`a}s} } @article {aMolina11 , title = {Real-time user independent hand gesture recognition from time-of-flight camera video using static and dynamic models}, journal = {Machine vision and applications}, volume = {24}, year = {2013}, month = {08/2011}, pages = {187{\textendash}204}, chapter = {187}, abstract = {The use of hand gestures offers an alternative to the commonly used human computer interfaces, providing a more intuitive way of navigating among menus and multimedia applications. This paper presents a system for hand gesture recognition devoted to control windows applications. Starting from the images captured by a time-of-flight camera (a camera that produces images with an intensity level inversely proportional to the depth of the objects observed) the system performs hand segmentation as well as a low-level extraction of potentially relevant features which are related to the morphological representation of the hand silhouette. Classification based on these features discriminates between a set of possible static hand postures which results, combined with the estimated motion pattern of the hand, in the recognition of dynamic hand gestures. The whole system works in real-time, allowing practical interaction between user and application.

}, issn = {0932-8092}, doi = {10.1007/s00138-011-0364-6}, url = {http://www.springerlink.com/content/062m51v58073572h/fulltext.pdf}, author = {Molina, J. and Escudero-Vi{\~n}olo, M. and Signorelo, A. and M. Pard{\`a}s and Ferran, C. and Bescos, J. and Marqu{\'e}s, F. and Mart{\'\i}nez, J.} } @article {aGallego13, title = {Region Based Foreground Segmentation Combinig Color and Depth Sensors Via Logarithmic Opinion Pool Decision}, journal = {Journal of Visual Communication and Image Representation}, year = {2013}, month = {04/2013}, abstract = {In this paper we present a novel foreground segmentation system that combines color and depth sensors information to perform a more complete Bayesian segmentation between foreground and background classes. The system shows a combination of spatial-color and spatial-depth region-based models for the foreground as well as color and depth pixel-wise models for the background in a Logarithmic Opinion Pool decision framework used to correctly combine the likelihoods of each model. A posterior enhancement step based on a trimap analysis is also proposed in order to correct the precision errors that the depth sensor introduces. The results presented in this paper show that our system is robust in front of color and depth camouflage problems between the foreground object and the background, and also improves the segmentation in the area of the objects\u2019 contours by reducing the false positive detections that appear due to the lack of precision of the depth sensors.

}, doi = {http://dx.doi.org/10.1016/j.jvcir.2013.03.019}, url = {http://www.sciencedirect.com/science/article/pii/S104732031300059X}, author = {Gallego, J. and M. Pard{\`a}s} } @inbook {bLeon13, title = {Region-based caption text extraction}, booktitle = {Lecture Notes in Electrical Engineering (Analysis, Retrieval and Delivery of Multimedia Content)}, volume = {158}, year = {2013}, month = {07/2012}, pages = {21-36}, publisher = {Springer}, organization = {Springer}, address = {New York}, abstract = {This chapter presents a method for caption text detection. The proposed method will be included in a generic indexing system dealing with other semantic concepts which are to be automatically detected as well. To have a coherent detection system, the various object detection algorithms use a common image description, a hierarchical region-based image model. The proposed method takes advantage of texture and geometric features to detect the caption text. Texture features are estimated using wavelet analysis and mainly applied for\ text candidate spotting. In turn,\ text characteristics verification\ relies on geometric features, which are estimated exploiting the region-based image model. Analysis of the region hierarchy provides the final caption text objects. The final step of\ consistency analysis for output\ is performed by a binarization algorithm that robustly estimates the thresholds on the caption text area of support.

}, keywords = {Text detection}, isbn = {978-1-4614-3830-4}, doi = {10.1007/978-1-4614-3831-1_2}, author = {Le{\'o}n, M. and Ver{\'o}nica Vilaplana and Gasull, A. and Marqu{\'e}s, F.} } @article {aSuau12, title = {Real-time head and hand tracking based on 2.5D data}, journal = {IEEE Transactions on Multimedia }, volume = {14}, year = {2012}, month = {06/2012}, pages = {575-585 }, abstract = {A novel real-time algorithm for head and hand tracking is proposed in this paper. This approach is based on data from a range camera, which is exploited to resolve ambiguities and overlaps. The position of the head is estimated with a depth-based template matching, its robustness being reinforced with an adaptive search zone. Hands are detected in a bounding box attached to the head estimate, so that the user may move freely in the scene. A simple method to decide whether the hands are open or closed is also included in the proposal. Experimental results show high robustness against partial occlusions and fast movements. Accurate hand trajectories may be extracted from the estimated hand positions, and may be used for interactive applications as well as for gesture classification purposes.

}, issn = {1520-9210}, doi = {http://dx.doi.org/10.1109/TMM.2012.2189853}, author = {Suau, X. and Ruiz-Hidalgo, J. and Casas, J.} } @conference {cVaras12a, title = {A Region-Based Particle Filter for Generic Object Tracking and Segmentation}, booktitle = {ICIP - International Conference on Image Processing}, year = {2012}, month = {09/2012}, address = {Orlando}, abstract = {In this work we present a region-based particle filter for generic object tracking and segmentation. The representation of the object in terms of regions homogeneous in color allows the proposed algorithm to robustly track the object and accurately segment its shape along the sequence. Moreover, this segmentation provides a mechanism to update the target model and allows the tracker to deal with color and shape variations of the object. The performance of the algorithm has been tested using the LabelMe Video public database.

The experiments show satisfactory results in both tracking and segmentation of the object without an important increase of the computational time due to an efficient computation of the image partition.\

This paper describes a system developed for the semi- automatic annotation of keyframes in a broadcasting company. The tool aims at assisting archivists who traditionally label every keyframe manually by suggesting them an automatic annotation that they can intuitively edit and validate. The system is valid for any domain as it uses generic MPEG-7 visual descriptors and binary SVM classifiers. The classification engine has been tested on the multiclass problem of semantic shot detection, a type of metadata used in the company to index new con- tent ingested in the system. The detection performance has been tested in two different domains: soccer and parliament. The core engine is accessed by a Rich Internet Application via a web service. The graphical user interface allows the edition of the suggested labels with an intuitive drag and drop mechanism between rows of thumbnails, each row representing a different semantic shot class. The system has been described as complete and easy to use by the professional archivists at the company.

We present a real-time human body tracking system for a single user in a Smart Room scenario. In this paper we propose a novel system that involves a silhouette-based cost function using variable windows, a hierarchical optimization method, parallel implementations of pixel-based algorithms and efficient usage of a low-cost hardware structure. Results in a Smart Room setup are presented.

}, isbn = {978-1-61284-349-0}, doi = {10.1109/ICME.2011.6011847}, url = {http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=\&arnumber=6011847}, author = {Alcoverro, M. and L{\'o}pez-M{\'e}ndez, A. and Casas, J. and M. Pard{\`a}s} } @conference {cSuau11, title = {Real-time head and hand tracking based on 2.5D data}, booktitle = {ICME - 2011 IEEE International Conference on Multimedia and Expo}, year = {2011}, pages = {1{\textendash}6}, abstract = {A novel real-time algorithm for head and hand tracking is proposed in this paper. This approach is based on 2.5D data from a range camera, which is exploited to resolve ambiguities and overlaps. Experimental results show high robustness against partial occlusions and fast movements. The estimated positions are fairly stable, allowing the extraction of accurate trajectories which may be used for gesture classification purposes.

}, isbn = {975001880X}, doi = {10.1109/ICME.2011.6011869}, url = {http://ieeexplore.ieee.org/xpls/abs_all.jsp?arnumber=6011869\&tag=1}, author = {Suau, X. and Casas, J. and Ruiz-Hidalgo, J.} } @conference {cLopez-Mendez11a, title = {Real-time upper body tracking with online initialization using a range sensor}, booktitle = {2011 IEEE International Conference on Computer VIsion Workshops (ICCV Workshops)}, year = {2011}, pages = {391{\textendash}398}, abstract = {We present a novel method for upper body pose estimation with online initialization of pose and the anthropometric profile. Our method is based on a Hierarchical Particle Filter that defines its likelihood function with a single view depth map provided by a range sensor. We use Connected Operators on range data to detect hand and head candidates that are used to enrich the Particle Filter{\textquoteright}s proposal distribution, but also to perform an automated initialization of the pose and the anthropometric profile estimation. A GPU based implementation of the likelihood evaluation yields real-time performance. Experimental validation of the proposed algorithm and the real-time implementation are provided, as well as a comparison with the recently released OpenNI tracker for the Kinect sensor.

}, isbn = {978-1-4673-0063-6/11}, doi = {10.1109/ICCVW.2011.6130268}, url = {http://ieeexplore.ieee.org/xpls/abs_all.jsp?arnumber=6130268}, author = {L{\'o}pez-M{\'e}ndez, A. and Alcoverro, M. and M. Pard{\`a}s and Casas, J.} } @mastersthesis {xAlfaro11 , title = {Reordenaci{\'o} i agrupament d{\textquoteright}imatges d{\textquoteright}una cerca de v{\'\i}deo}, year = {2011}, month = {01/2011}, abstract = {La recuperaci{\'o} de v{\'\i}deo a trav{\'e}s de consultes textuals es una practica molt com{\'u} en els arxius de radiodifusi{\'o}. Les paraules clau de les consultes son comparades amb les metadades que s{\textquoteright}anoten manualment als assets de v{\'\i}deo pels documentalistes. A m{\'e}s, les cerques textuals b{\`a}siques generen llistes de resultats planes, on tots els resultats tenen la mateixa import{\`a}ncia, ja que, es limita a avaluar bin{\`a}riament si la paraula de cerca apareix o no entre les metadades associades als continguts. A m{\'e}s, acostumen a mostrar continguts molt similars, donant al usuari una llista ordenada de resultats de poca diversitat visual. La redund{\`a}ncia en els resultats provoca un malbaratament d{\textquoteright}espai a la interf{\'\i}cie gr{\`a}fica d{\textquoteright}usuari (GUI) que sovint obliga a l{\textquoteright}usuari a interactuar fortament amb la interf{\'\i}cie gr{\`a}fica fins localitzar els resultats rellevants per a la seva cerca. La aportaci{\'o} del present projecte consisteix en la presentaci{\'o} d{\textquoteright}una estrat{\`e}gia de reordenaci{\'o} i agrupaci{\'o} per obtenir keyframes de major rellev{\`a}ncia entre els primers resultats, per{\`o} al mateix temps mantenir una diversitat d{\textquoteright}assets. D{\textquoteright}aquesta forma, aquestes t{\`e}cniques permetran millorar els sistemes de visualitzaci{\'o} d{\textquoteright}imatges resultants d{\textquoteright}una cerca de v{\'\i}deo. L{\textquoteright}eina global es dissenya per ser integrada en l{\textquoteright}entorn del Digition, el gestor de continguts audiovisuals de la Corporaci{\'o} Catalana de Mitjans Audiovisuals.

This thesis describes the graphical user interface developed for semi-automatic keyframebased semantic shot annotation and the semantic shot classifiers built. The graphical user interface aims to optimize the current indexation process by substituting manual annotation for automatic annotation and validation. The system is based on supervised learning binary classifiers and web services. The graphical user interface provides the necessary tools to fix and validate the automatic detections and to learn from the user feedback to retrain the system and improve it. Results of the classifiers evaluation, performed using cross-validation methods, show a good performance in terms of precision and recall. The graphical user interface has been described as complete and easy to use by a professional documentalist at a broadcast company.

The purpose of the current work is to propose, under a statistical framework, a family of unsupervised region merging techniques providing a set of the most relevant region-based explanations of an image at different levels of analysis. These techniques are characterized by general and nonparametric region models, with neither color nor texture homogeneity assumptions, and a set of innovative merging criteria, based on information theory statistical measures. The scale consistency of the partitions is assured through i) a size regularization term into the merging criteria and a classical merging order, or ii) using a novel scale-based merging order to avoid the region size homogeneity imposed by the use of a size regularization term. Moreover, a partition significance index is defined to automatically determine the subset of most representative partitions from the created hierarchy. Most significant automatically extracted partitions show the ability to represent the semantic content of the image from a human point of view. Finally, a complete and exhaustive evaluation of the proposed techniques is performed, using not only different databases for the two main addressed problems (object-oriented segmentation of generic images and texture image segmentation), but also specific evaluation features in each case: under- and oversegmentation error, and a large set of region-based, pixel-based and error consistency indicators, respectively. Results are promising, outperforming in most indicators both object-oriented and texture state-of-the-art segmentation techniques.

}, issn = {1057-7149}, doi = {10.1109/TIP.2010.2043008}, url = {http://www.dfmf.uned.es/~daniel/www-imagen-dhp/biblio/region-merging-information.pdf}, author = {Calderero, F. and Marqu{\'e}s, F.} } @conference {cLeon10, title = {Region-based caption text extraction}, booktitle = {11th. International Workshop on Image Analysis for Multimedia Application Services}, year = {2010}, pages = {1{\textendash}4}, url = {http://ieeexplore.ieee.org/xpl/mostRecentIssue.jsp?punumber=5608542}, author = {Le{\'o}n, M. and Ver{\'o}nica Vilaplana and Gasull, A. and Marqu{\'e}s, F.} } @phdthesis {dVilaplana10, title = {Region-based face detection, segmentation and tracking. framework definition and application to other objects}, year = {2010}, school = {Universitat Polit{\`e}cnica de Catalunya (UPC)}, type = {phd}, abstract = {One of the central problems in computer vision is the automatic recognition of object classes. In particular, the detection of the class of human faces is a problem that generates special interest due to the large number of applications that require face detection as a first step. In this thesis we approach the problem of face detection as a joint detection and segmentation problem, in order to precisely localize faces with pixel accurate masks. Even though this is our primary goal, in finding a solution we have tried to create a general framework as independent as possible of the type of object being searched. For that purpose, the technique relies on a hierarchical region-based image model, the Binary Partition Tree, where objects are obtained by the union of regions in an image partition. In this work, this model is optimized for the face detection and segmentation tasks. Different merging and stopping criteria are proposed and compared through a large set of experiments. In the proposed system the intra-class variability of faces is managed within a learning framework. The face class is characterized using a set of descriptors measured on the tree nodes, and a set of one-class classifiers. The system is formed by two strong classifiers. First, a cascade of binary classifiers simplifies the search space, and afterwards, an ensemble of more complex classifiers performs the final classification of the tree nodes. The system is extensively tested on different face data sets, producing accurate segmentations and proving to be quite robust to variations in scale, position, orientation, lighting conditions and background complexity. We show that the technique proposed for faces can be easily adapted to detect other object classes. Since the construction of the image model does not depend on any object class, different objects can be detected and segmented using the appropriate object model on the same image model. New object models can be easily built by selecting and training a suitable set of descriptors and classifiers. Finally, a tracking mechanism is proposed. It combines the efficiency of the mean-shift algorithm with the use of regions to track and segment faces through a video sequence, where both the face and the camera may move. The method is extended to deal with other deformable objects, using a region-based graph-cut method for the final object segmentation at each frame. Experiments show that both mean-shift based trackers produce accurate segmentations even in difficult scenarios such as those with similar object and background colors and fast camera and object movements.

}, url = {http://hdl.handle.net/10803/33330}, author = {Ver{\'o}nica Vilaplana}, editor = {Marqu{\'e}s, F.} } @conference {cVilaplana08, title = {Region-based mean shift tracking: Application to face tracking}, booktitle = {IEEE International Conference on Image Processing}, year = {2008}, pages = {2712{\textendash}2715}, isbn = {978-1-4577-0538-0}, author = {Ver{\'o}nica Vilaplana and Marqu{\'e}s, F.} } @conference {cGiro-i-Nieto07a, title = {Region-based annotation tool using partition trees}, booktitle = {International Conference on Semantic and Digital Media Technologies}, year = {2007}, month = {12/2007}, pages = {3{\textendash}4}, address = {Genova, Italy}, abstract = {This paper presents an annotation tool for the man- ual and region-based annotation of still images. The selection of regions is achieved by navigating through a Partition Tree, a data structure that offers a multiscale representation of the image. The user interface provides a framework for the annotation of both atomic and composite semantic classes and generates an MPEG-7 XML compliant file.\

The visual hull is defined as the intersection of the visual cones formed by the back-projection of C 2D silhouettes into the 3D space. The set of 2D silhouettes is consistent if there exists at least one volume which exactly explains them. Shape from silhouette (SfS) is the general term used to refer to the techniques employed to obtain a volume from silhouettes, which are considered to be consistent. In this paper we extend the idea of SfS to be used with sets of inconsistent silhouettes resulting from inaccurate calibration and erroneous 2D silhouette extraction techniques. The method presented detects and corrects errors in the silhouettes based on the consistency principle, implying an unbiased treatment of false alarms and misses in 2D.

}, author = {Landabaso, J. and M. Pard{\`a}s and Casas, J.} } @conference {cLopez06, title = {Rotating convection: Eckhauss-Benjamin-Feir instability}, booktitle = {59th Annual Meeting of the APS Division of Fluid Dynamics}, year = {2006}, pages = {2004{\textendash}2004}, isbn = {-}, author = {Lopez, J. and Mercader, M. and Marqu{\'e}s, F. and Batiste, O.} } @conference {cVilaplana05, title = {Region-based extraction and analysis of visual objects information}, booktitle = {Fourth International Workshop on Content-Based Multimedia Indexing, CBMI 2005}, year = {2005}, address = {Riga, Letonia}, abstract = {In this paper, we propose a strategy to detect objects from\ still images that relies on combining two types of models: a\ perceptual and a structural model. The algorithms that are\ proposed for both types of models make use of a regionbased description of the image relying on a Binary Partition\ Tree. Perceptual models link the low-level signal description with semantic classes of limited variability. Structural\ models represent the common structure of all instances by\ decomposing the semantic object into simpler objects and\ by defining the relations between them using a DescriptionGraph.

}, isbn = {0-7803-6293-4}, author = {Ver{\'o}nica Vilaplana and Xavier Gir{\'o}-i-Nieto and Salembier, P. and Marqu{\'e}s, F.} } @conference {cDorea04, title = {A region-based algorithm for image segmentation and parametric motion estimation}, booktitle = {Image Analysis for Multimedia Interactive Services}, year = {2004}, isbn = {972-98115-7-1}, author = {Dorea, C. and M. Pard{\`a}s and Marqu{\'e}s, F.} } @conference {cJose-Luis04, title = {Robust Tracking and Object Classification Towards Automated Video Surveillance}, booktitle = {International Conference on Image Analysis and Recognition}, year = {2004}, pages = {46333{\textendash}470}, isbn = {3540232230}, author = {Landabaso, J. and M. Pard{\`a}s and Xu, Li-Qun} } @article {aLandabaso04, title = {Robust Tracking and Object Classification Towards Automated Video Surveillance}, journal = {Lecture notes in computer science}, volume = {3212}, year = {2004}, pages = {463{\textendash}470}, issn = {0302-9743}, author = {Landabaso, J. and Xu, Li-Qun and M. Pard{\`a}s} } @conference {cO{\textquoteright}Connor03, title = {Region and object segmentation algorithms in the Qimera segmentation platform}, booktitle = {Third International Workshop on Content-Based Multimedia Indexing}, year = {2003}, pages = {95{\textendash}103}, abstract = {In this paper we present the Qimera segmentation platform and describe the different approaches to segmentation that have been implemented in the system to date. Analysis techniques have been implemented for both region-based and object-based segmentation. The region-based segmentation algorithms include: a colour segmentation algorithm based on a modified Recursive Shortest Spanning Tree (RSST) approach, an implementation of a colour image segmentation algorithm based on the K-Means-with-Connectivity-Constraint (KMCC) algorithm and an approach based on the Expectation Maximization (EM) algorithm applied in a 6D colour/texture space. A semi-automatic approach to object segmentation that uses the modified RSST approach is outlined. An automatic object segmentation approach via snake propagation within a level-set framework is also described. Illustrative segmentation results are presented in all cases. Plans for future research within the Qimera project are also discussed.

}, isbn = {978-84-612-2373-2}, author = {O{\textquoteright}Connor, N. and Sav, S. and Adamek, T. and Mezaris, V. and Kompatsiaris, I. and Lui, T. and Izquierdo, E. and Ferran, C. and Casas, J.} } @article {aEugenio01, title = {A real-time automatic acquisition, processing and distribution system for AVHRR and SeaWIFS imagery}, journal = {IEEE geoscience electronics society newsletter}, volume = {-}, number = {Issue 20}, year = {2001}, pages = {10{\textendash}15}, issn = {0161-7869}, author = {F. Eugenio and Marcello, J. and Marqu{\'e}s, F. and Hernandez-Guerra, A. and Rovaris, E.} } @article {aVilaplana01, title = {A region-based approach to face segmentation and tracking in video sequences}, journal = {Latin american applied research}, volume = {31}, number = {2}, year = {2001}, pages = {99{\textendash}106}, issn = {0327-0793}, author = {Ver{\'o}nica Vilaplana and Marqu{\'e}s, F.} } @conference {cRuiz-Hidalgo01, title = {Robust segmentation and representation of foreground key-regions in video sequences}, booktitle = {International Conference on Acoustics, Speech and Signal Processing ICASSP{\textquoteright}01}, year = {2001}, month = {05/2001}, pages = {1565{\textendash}1568}, address = {Salt Lake City, USA}, author = {Ruiz-Hidalgo, J. and Salembier, P.} } @inbook {bSalembier00, title = {Region-based filtering of images and video sequences: a morphological viewpoint}, booktitle = {Nonlinear Image Processing}, year = {2000}, publisher = {Academic Press}, organization = {Academic Press}, edition = {S. Mitra and G. Sicuranza (Eds.)}, chapter = {9}, isbn = {0125004516}, author = {Salembier, P.} } @conference {cVilaplana99, title = {A region-based approach to face segmentation and tracking in video sequences}, booktitle = {VIII Reuni{\'o}n de Trabajo en Procesamiento de la Informaci{\'o}n y Control}, year = {1999}, pages = {345{\textendash}350}, isbn = {0-8186-8821-1}, author = {Ver{\'o}nica Vilaplana and Marqu{\'e}s, F.} } @article {aSalembier99, title = {Region-based representations of image and video : segmentation tools for multimedia services}, journal = {IEEE transactions on circuits and systems for video technology}, volume = {9}, number = {8}, year = {1999}, pages = {1147{\textendash}1169}, issn = {1051-8215}, author = {Salembier, P. and Marqu{\'e}s, F.} } @article {xRuiz-Hidalgo99, title = {The representation of images using scale trees}, year = {1999}, institution = {University of East Anglia}, type = {Master by Research}, abstract = {This thesis presents a new tree structure that codes the grey scale information of an\ image. Based on a scale-space processor called the sieve, a scale tree represents\ the image in a hierarchical manner in which nodes of the tree describe features of\ the image at a specific scales.

This representation can be used to perform different image processing operations. Filtering, segmentation or motion detection can be accomplished by parsing\ the tree using different attributes associated with the nodes

}, author = {Ruiz-Hidalgo, J.} } @conference {cGarrido99, title = {Representing and retrieving regions using binary partition trees}, booktitle = {1999 IEEE International Conference on Image Processing, ICIP 1999}, year = {1999}, address = {Kobe, Japan}, isbn = {0-7803-5470-2}, author = {Garrido, L. and Salembier, P. and Casas, J.} } @conference {cGarrido98, title = {Region-based analysis of video sequences with a general merging algorithm}, booktitle = {9th European Signal Processing Conference, EUSIPCO 1998}, year = {1998}, pages = {1693{\textendash}1696}, address = {Rhodes, Greece}, isbn = {960-7620-06-4}, author = {Garrido, L. and Salembier, P.} } @conference {cVilaplana98, title = {Region-based segmentation segmentation and tracking of human faces}, booktitle = {9th European Signal Processing Conference, EUSIPCO 1998}, year = {1998}, pages = {311{\textendash}314}, address = {Rhodes, Greece}, isbn = {960-7620-06-4}, author = {Ver{\'o}nica Vilaplana and Marqu{\'e}s, F. and Salembier, P. and Garrido, L.} } @conference {cRuiz-Hidalgo98a, title = {Robust morphological scale-space trees}, booktitle = {Noblesse Workshop on Non-Linear Model Based Image Analysis}, year = {1998}, month = {07/1998}, pages = {133{\textendash}139}, author = {Ruiz-Hidalgo, J. and Bangham, J. and Harvey, R.} } @article {aCasas97, title = {A region-based subband coding scheme}, journal = {Signal Processing: Image Communication}, volume = {10}, number = {1-2}, year = {1997}, month = {10/1997}, pages = {173{\textendash}200}, abstract = {This paper describes a region-based subband coding scheme intended for efficient representation of the visual information contained in image regions of arbitrary shape. QMF filters are separately applied inside each region for the analysis and synthesis stages, using a signal-adaptive symmetric extension technique at region borders. The frequency coefficients corresponding to each region are identified over the various subbands of the decomposition, so that the coding steps {\textemdash} namely, bit-allocation, quantization and entropy coding {\textemdash} can be performed independently for each region. Region-based subband coding exploits the possible homogeneity of the region contents by distributing the available bitrate not only in the frequency domain but also in the spatial domain, i.e. among the considered regions. The number of bits assigned to the subbands is optimized region by region for the whole image, by means of a rate-distortion optimization algorithm. Improved compression efficiency is obtained thanks to the local adaptativity of the bit allocation to the spectral contents of the different regions. This compensates for the overhead data spent in the coding of contour information. As the subband coefficients obtained for each region are coded as separate data units, the content-based functionalities required for the future MPEG4 video coding standard can be readily handled. For instance, content-based scalability is possible by simply imposing user-defined constraints to the bit-assignment in some regions.

}, keywords = {SCHEMA}, issn = {0923-5965}, doi = {10.1016/S0923-5965(97)00024-6}, author = {Casas, J. and Torres, L.} } @conference {cPardas97, title = {Relative depth estimation and segmentation in monocular sequences}, booktitle = {1997 PICTURE CODING SYMPOSIUM}, year = {1997}, pages = {367{\textendash}372}, isbn = {0 7923 7862 8}, author = {M. Pard{\`a}s} } @conference {cSalembier97a, title = {Robust motion estimation using connected operators}, booktitle = {IEEE International Conference on Image Processing, ICIP{\textquoteright}97}, year = {1997}, pages = {77{\textendash}80}, address = {Santa Barbara, USA}, isbn = {1522-4880}, author = {Salembier, P. and Sanson, H.} } @article {aSalembier95, title = {Region-based video coding using mathematical morphology}, journal = {Proceedings of the IEEE}, volume = {83}, number = {6}, year = {1995}, pages = {843{\textendash}857}, issn = {0018-9219}, author = {Salembier, P. and Torres, L. and Meyer, F. and Gu, C.} } @conference {cMarques94b, title = {Recursive image sequence segmentation by hierarchical models}, booktitle = {12th IAPR International Conference on Pattern Recognition}, year = {1994}, pages = {523{\textendash}525}, isbn = {0-8186-6265-4}, author = {Marqu{\'e}s, F. and Vera, V. and Gasull, A.} } @conference {cCasas94, title = {Residual image coding using mathematical morphology}, booktitle = {IEEE International Conference on Acoustics, Speech and Signal Processing}, year = {1994}, pages = {597{\textendash}600}, isbn = {0-7803-1775-0}, author = {Casas, J. and Torres, L.} }