This work proposes a novel end-to-end convolutional neural network (CNN) architecture to automatically quantify the severity of knee osteoarthritis (OA) using X-Ray images, which incorporates trainable attention modules acting as unsupervised fine-grained detectors of the region of interest (ROI). The proposed attention modules can be applied at different levels and scales across any CNN pipeline, helping the network to learn relevant attention patterns over the most informative parts of the image at different resolutions. We test the proposed attention mechanism on existing state-of-the-art CNN architectures as our base models, achieving promising results on the benchmark knee OA datasets from the Osteoarthritis Initiative (OAI) and the Multicenter Osteoarthritis Study (MOST). All the code from our experiments will be publicly available in the GitHub repository: https://github.com/marc-gorriz/KneeOA-CNNAttention

This paper investigates modifying an existing neural network architecture for static saliency prediction using two types of recurrences that integrate information from the temporal domain. The first modification is the addition of a ConvLSTM within the architecture, while the second is a computationally simple exponential moving average of an internal convolutional state. We use weights pre-trained on the SALICON dataset and fine-tune our model on DHF1K. Our results show that both modifications achieve state-of-the-art results and produce similar saliency maps.
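The exponential moving average recurrence described above can be illustrated with a minimal sketch. It assumes the internal convolutional state of each frame is flattened to a list of floats; the function name `ema_states` and the smoothing factor `alpha` are hypothetical and are not taken from the paper.

```python
def ema_states(frame_features, alpha=0.1):
    """Return the smoothed state after each frame of a video.

    frame_features: list of per-frame feature vectors (lists of floats).
    alpha: weight given to the current frame (assumed value, not from the paper).
    """
    state = None
    smoothed = []
    for features in frame_features:
        if state is None:
            # The first frame initialises the running state.
            state = list(features)
        else:
            # Blend the current features into the running state.
            state = [alpha * x + (1.0 - alpha) * s
                     for x, s in zip(features, state)]
        smoothed.append(state)
    return smoothed
```

Unlike a ConvLSTM, this recurrence has no trainable parameters beyond the blending factor, which is what makes it computationally simple.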

Evaluating image retrieval systems in a quantitative way, for example by computing measures like mean average precision, allows for objective comparisons with a ground-truth. However, in cases where ground-truth is not available, the only alternative is to collect feedback from a user. Thus, qualitative assessments become important to better understand how the system works. Visualizing the results could be, in some scenarios, the only way to evaluate the results obtained and also the only opportunity to identify that a system is failing. This necessitates developing a User Interface (UI) for a Content Based Image Retrieval (CBIR) system that allows visualization of results and improvement via capturing user relevance feedback. A well-designed UI facilitates understanding of the performance of the system, both in cases where it works well and, perhaps more importantly, in those which highlight the need for improvement. Our open-source system implements three components to help researchers quickly develop these capabilities for their retrieval engine. We present: a web-based user interface to visualize retrieval results and collect user annotations; a server that simplifies connection with any underlying CBIR system; and a server that manages the search engine data.

We introduce PathGAN, a deep neural network for visual scanpath prediction trained on adversarial examples. A visual scanpath is defined as the sequence of fixation points that a human observer's gaze traces over an image. PathGAN is composed of two parts, the generator and the discriminator. Both parts extract features from images using off-the-shelf networks, and train recurrent layers to generate or discriminate scanpaths accordingly. In scanpath prediction, the stochastic nature of the data makes it very difficult to generate realistic predictions using supervised learning strategies, so we adopt adversarial training as a suitable alternative. Our experiments show that PathGAN improves the state of the art of visual scanpath prediction on the Salient360! dataset.

This work obtained the 2nd award in Prediction of Head-gaze Scan-paths for Images, and the 2nd award in Prediction of Eye-gaze Scan-paths for Images at the IEEE ICME 2018 Salient360! Challenge.

This work explores attention models to weight the contribution of local convolutional representations for the instance search task. We present a retrieval framework based on bags of local convolutional features (BLCF) that benefits from saliency weighting to build an efficient image representation. The use of human visual attention models (saliency) allows significant improvements in retrieval performance without the need to conduct region analysis or spatial verification, and without requiring any feature fine tuning. We investigate the impact of different saliency models, finding that higher performance on saliency benchmarks does not necessarily equate to improved performance when used in instance search tasks. The proposed approach outperforms the state-of-the-art on the challenging INSTRE benchmark by a large margin, and provides similar performance on the Oxford and Paris benchmarks compared to more complex methods that use off-the-shelf representations.

We introduce deep neural networks for scanpath and saliency prediction trained on 360-degree images. The scanpath prediction model, called SaltiNet, is based on a novel temporal-aware representation of saliency information named the saliency volume. The first part of the network consists of a model trained to generate saliency volumes, whose parameters are fit by back-propagation using a binary cross entropy (BCE) loss over downsampled versions of the saliency volumes. Sampling strategies over these volumes are used to generate scanpaths over the 360-degree images. Our experiments show the advantages of using saliency volumes, and how they can be used for related tasks. We also show how a similar architecture achieves state-of-the-art performance for the related task of saliency map prediction. Our source code and trained models are publicly available.

}, url = {https://www.sciencedirect.com/science/article/pii/S0923596518306209}, author = {Assens, Marc and McGuinness, Kevin and O{\textquoteright}Connor, N. and Xavier Gir{\'o}-i-Nieto} } @unpublished {xMohedanoa, title = {Fine-tuning of CNN models for Instance Search with Pseudo-Relevance Feedback}, year = {2017}, publisher = {NIPS 2017 Women in Machine Learning Workshop}, address = {Long Beach, CA, USA}, abstract = {CNN classification models trained on millions of labeled images have been proven to encode {\textquotedblleft}general purpose{\textquotedblright} descriptors in their intermediate layers. These descriptors are useful for a diverse range of computer vision problems~\cite{1}. However, the target task of these models is substantially different to the instance search task. While classification is concerned with distinguishing between different classes, instance search is concerned with identifying concrete instances of a particular class.

In this work we propose an unsupervised approach to fine-tune a model for similarity learning~\cite{2}. For that, we combine two different search engines: one based on off-the-shelf CNN features, and another one on the popular SIFT features. As shown in the figure below, we observe that the information of pre-trained CNN representations and SIFT is in most cases complementary, which allows the generation of high quality rank lists. The fusion of the two rankings is used to generate training data for a particular dataset. A pseudo-relevance feedback strategy~\cite{3} is used for sampling images from rankings, considering the top images as positive examples of a particular instance and middle-low ranked images as negative examples.
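The sampling strategy described above can be sketched as follows. The abstract does not state how the two rankings are fused, so this sketch substitutes reciprocal rank fusion as an assumption; the function names, the constant `k`, and the cut-off parameters are all hypothetical.

```python
def fuse_rankings(rank_a, rank_b, k=60):
    """Fuse two ranked lists of image ids with reciprocal rank fusion.

    Reciprocal rank fusion is an assumed stand-in; the source does not
    name the fusion method actually used.
    """
    scores = dict()
    for ranking in (rank_a, rank_b):
        for position, image_id in enumerate(ranking, start=1):
            scores[image_id] = scores.get(image_id, 0.0) + 1.0 / (k + position)
    # Highest fused score first; ties are broken by image id.
    return sorted(scores, key=lambda image_id: (-scores[image_id], image_id))


def sample_pseudo_labels(fused, num_pos, neg_start, num_neg):
    """Take top-ranked images as positives and a middle-low slice as negatives."""
    positives = fused[:num_pos]
    negatives = fused[neg_start:neg_start + num_neg]
    return positives, negatives
```

For example, `sample_pseudo_labels(fused, 10, 100, 50)` would treat the 10 best fused results as pseudo-positives and ranks 101 to 150 as pseudo-negatives; suitable cut-offs depend on the dataset.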

}, author = {Mohedano, Eva and McGuinness, Kevin and Xavier Gir{\'o}-i-Nieto and O{\textquoteright}Connor, N.} } @inbook {bMohedano17, title = {Object Retrieval with Deep Convolutional Features}, booktitle = {Deep Learning for Image Processing Applications}, volume = {31}, number = {Advances in Parallel Computing}, year = {2017}, publisher = {IOS Press}, organization = {IOS Press}, address = {Amsterdam, The Netherlands}, abstract = {Image representations extracted from convolutional neural networks (CNNs) outdo hand-crafted features in several computer vision tasks, such as visual image retrieval. This chapter recommends a simple pipeline for encoding the local activations of a convolutional layer of a pretrained CNN utilizing the well-known Bag of Words (BoW) aggregation scheme and called bag of local convolutional features (BLCF). Matching each local array of activations in a convolutional layer to a visual word results in an assignment map, which is a compact representation relating regions of an image with a visual word. We use the assignment map for fast spatial reranking, finding object localizations that are used for query expansion. We show the suitability of the BoW representation based on local CNN features for image retrieval, attaining state-of-the-art performance on the Oxford and Paris buildings benchmarks. We demonstrate that the BLCF system outperforms the latest procedures using sum pooling for a subgroup of the challenging TRECVid INS benchmark according to the mean Average Precision (mAP) metric.

}, issn = {978-1-61499-822-8 }, doi = {10.3233/978-1-61499-822-8-137}, url = {http://ebooks.iospress.nl/volumearticle/48028}, author = {Mohedano, Eva and Amaia Salvador and McGuinness, Kevin and Xavier Gir{\'o}-i-Nieto and O{\textquoteright}Connor, N. and Marqu{\'e}s, F.} } @conference {cPana, title = {SalGAN: Visual Saliency Prediction with Generative Adversarial Networks}, booktitle = {CVPR 2017 Scene Understanding Workshop (SUNw)}, year = {2017}, address = {Honolulu, Hawaii, USA}, abstract = {We introduce SalGAN, a deep convolutional neural network for visual saliency prediction trained with adversarial examples. The first stage of the network consists of a generator model whose weights are learned by back-propagation computed from a binary cross entropy (BCE) loss over downsampled versions of the saliency maps. The resulting prediction is processed by a discriminator network trained to solve a binary classification task between the saliency maps generated by the generative stage and the ground truth ones. Our experiments show how adversarial training allows reaching state-of-the-art performance across different metrics when combined with a widely-used loss function like BCE.

}, url = {https://arxiv.org/abs/1701.01081}, author = {Pan, Junting and Cristian Canton-Ferrer and McGuinness, Kevin and O{\textquoteright}Connor, N. and Jordi Torres and Elisa Sayrol and Xavier Gir{\'o}-i-Nieto} } @conference {cAssens, title = {SaltiNet: Scan-path Prediction on 360 Degree Images using Saliency Volumes}, booktitle = {ICCV Workshop on Egocentric Perception, Interaction and Computing}, year = {2017}, month = {07/2017}, publisher = {IEEE}, organization = {IEEE}, address = {Venice, Italy}, abstract = {We introduce SaltiNet, a deep neural network for scanpath prediction trained on 360-degree images. The first part of the network consists of a model trained to generate saliency volumes, whose parameters are learned by back-propagation computed from a binary cross entropy (BCE) loss over downsampled versions of the saliency volumes. Sampling strategies over these volumes are used to generate scanpaths over the 360-degree images. Our experiments show the advantages of using saliency volumes, and how they can be used for related tasks.

Winner of three awards at the Salient 360 Challenge at IEEE ICME 2017 (Hong Kong): Best Scan Path, Best Student Scan-path and Audience Award.

Image representations extracted from convolutional neural networks (CNNs) have been shown to outperform hand-crafted features in multiple computer vision tasks, such as visual image retrieval. This work proposes a simple pipeline for encoding the local activations of a convolutional layer of a pre-trained CNN using the well-known bag of words aggregation scheme (BoW). Assigning each local array of activations in a convolutional layer to a visual word produces an \textit{assignment map}, a compact representation that relates regions of an image with a visual word. We use the assignment map for fast spatial reranking, obtaining object localizations that are used for query expansion. We demonstrate the suitability of the Bag of Words representation based on local CNN features for image retrieval, achieving state-of-the-art performance on the Oxford and Paris buildings benchmarks. We show that our proposed system for CNN feature aggregation with BoW outperforms state-of-the-art techniques using sum pooling at a subset of the challenging TRECVid INS benchmark.
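The assignment map described above can be illustrated with a minimal sketch, assuming a small feature map stored as nested lists and a visual vocabulary (`codebook`) that has already been learned, for instance with k-means. All function names are hypothetical, and a real system would use vectorized nearest-neighbour search.

```python
def nearest_word(feature, codebook):
    """Return the index of the visual word closest to one local feature."""
    best_index, best_dist = 0, float("inf")
    for index, centroid in enumerate(codebook):
        # Squared Euclidean distance between the feature and the centroid.
        dist = sum((f - c) ** 2 for f, c in zip(feature, centroid))
        if dist < best_dist:
            best_index, best_dist = index, dist
    return best_index


def assignment_map(feature_map, codebook):
    """Map every spatial location of a conv feature map to a visual word id."""
    return [[nearest_word(feat, codebook) for feat in row] for row in feature_map]


def bow_histogram(assignments, vocab_size):
    """Count visual word occurrences to obtain the bag-of-words vector."""
    hist = [0] * vocab_size
    for row in assignments:
        for word in row:
            hist[word] += 1
    return hist
```

Because the assignment map keeps the spatial layout, the histogram can be recomputed over any sub-window without touching the original features, which is what makes spatial reranking fast.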

Best poster award at ACM ICMR 2016

Overall acceptance rate in ICMR 2016: 30\%

DCU participated with a consortium of colleagues from NUIG and UPC in two tasks, INS and VTT. For the INS task we developed a framework consisting of face detection and representation and place detection and representation, with a user annotation of top-ranked videos. For the VTT task we ran 1,000 concept detectors from the VGG-16 deep CNN on 10 keyframes per video and submitted 4 runs for caption re-ranking, based on BM25, Fusion, Word2Vec and a fusion of baseline BM25 and Word2Vec. With the same pre-processing for caption generation we used an open source image-to-caption CNN-RNN toolkit NeuralTalk2 to generate a caption for each keyframe and combine them.

}, url = {http://doras.dcu.ie/21484/}, author = {Marsden, Mark and Mohedano, Eva and McGuinness, Kevin and Calafell, Andrea and Xavier Gir{\'o}-i-Nieto and O{\textquoteright}Connor, N. and Zhou, Jiang and Azevedo, Lucas and Daubert, Tobias and Davis, Brian and H{\"u}rlimann, Manuela and Afli, Haithem and Du, Jinhua and Ganguly, Debasis and Li, Wei and Way, Andy and Smeaton, Alan F.} } @conference {cPan, title = {Shallow and Deep Convolutional Networks for Saliency Prediction}, booktitle = {IEEE Conference on Computer Vision and Pattern Recognition, CVPR}, year = {2016}, month = {06/2016}, publisher = {Computer Vision Foundation / IEEE}, organization = {Computer Vision Foundation / IEEE}, address = {Las Vegas, NV, USA}, abstract = {The prediction of salient areas in images has been traditionally addressed with hand-crafted features based on neuroscience principles. This paper, however, addresses the problem with a completely data-driven approach by training a convolutional neural network (convnet). The learning process is formulated as a minimization of a loss function that measures the Euclidean distance of the predicted saliency map with the provided ground truth. The recent publication of large datasets of saliency prediction has provided enough data to train end-to-end architectures that are both fast and accurate. Two designs are proposed: a shallow convnet trained from scratch, and another, deeper solution whose first three layers are adapted from another network trained for classification. To the authors' knowledge, these are the first end-to-end CNNs trained and tested for the purpose of saliency prediction.

The interest of users in having their lives digitally recorded has grown in recent years thanks to advances in wearable sensors. Wearable cameras are among the most informative of these sensors, but they generate large amounts of images that require automatic analysis to build useful applications upon them. In this work we explore the potential of these devices to find the last appearance of personal objects among the more than 2,000 images that are generated every day. This application could help in developing personal assistants capable of helping users when they do not remember where they left their personal objects. We adapt a previous work on instance search to the specific domain of egocentric vision.

Extended abstract presented as poster in the 4th Workshop on Egocentric (First-Person) Vision, CVPR 2016.

}, author = {Reyes, Cristian and Mohedano, Eva and McGuinness, Kevin and O{\textquoteright}Connor, N. and Xavier Gir{\'o}-i-Nieto} } @conference {cMohedano, title = {Exploring EEG for Object Detection and Retrieval}, booktitle = {ACM International Conference on Multimedia Retrieval (ICMR) }, year = {2015}, address = {Shanghai, China}, abstract = {This paper explores the potential for using Brain Computer Interfaces (BCI) as a relevance feedback mechanism in content-based image retrieval. We investigate if it is possible to capture useful EEG signals to detect if relevant objects are present in a dataset of realistic and complex images. We perform several experiments using a rapid serial visual presentation (RSVP) of images at different rates (5 Hz and 10 Hz) on 8 users with different degrees of familiarization with BCI and the dataset. We then use the feedback from the BCI and mouse-based interfaces to retrieve objects in a subset of TRECVid images. We show that it is indeed possible to detect such objects in complex images and, also, that users with previous knowledge of the dataset or experience with the RSVP outperform others. When the users have limited time to annotate the images (100 seconds in our experiments) both interfaces are comparable in performance. Comparing our best users in a retrieval task, we found that EEG-based relevance feedback outperforms mouse-based feedback. The realistic and complex image dataset differentiates our work from previous studies on EEG for image retrieval.

[Extended version in arXiv:1504.02356]

Overall acceptance rate: 33\%

This paper explores the potential of brain-computer interfaces in segmenting objects from images. Our approach is centered around designing an effective method for displaying the image parts to the users such that they generate measurable brain reactions. When a block of pixels is displayed, we estimate the probability of that block containing the object of interest using a score based on EEG activity. After several such blocks are displayed in rapid serial visual presentation, the resulting probability map is binarized and combined with the GrabCut algorithm to segment the image into object and background regions. This study extends our previous work that showed how BCI and simple EEG analysis are useful in locating object boundaries in images.

}, issn = {1573-7721}, doi = {10.1007/s11042-015-2805-0}, url = {http://dx.doi.org/10.1007/s11042-015-2805-0}, author = {Mohedano, Eva and Healy, Graham and Kevin McGuinness and Xavier Gir{\'o}-i-Nieto and O{\textquoteright}Connor, N. and Smeaton, Alan F.} } @conference {cVentura, title = {Improving Spatial Codification in Semantic Segmentation}, booktitle = {IEEE International Conference on Image Processing (ICIP), 2015}, year = {2015}, month = {09/2015}, publisher = {IEEE}, organization = {IEEE}, address = {Quebec City}, abstract = {This paper explores novel approaches for improving the spatial codification for the pooling of local descriptors to solve the semantic segmentation problem. We propose to partition the image into three regions for each object to be described: Figure, Border and Ground. This partition aims at minimizing the influence of the image context on the object description and vice versa by introducing an intermediate zone around the object contour. Furthermore, we also propose a richer visual descriptor of the object by applying a Spatial Pyramid over the Figure region. Two novel Spatial Pyramid configurations are explored: Cartesian-based and crown-based Spatial Pyramids. We test these approaches with state-of-the-art techniques and show that they improve the Figure-Ground based pooling in the Pascal VOC 2011 and 2012 semantic segmentation challenges.

Insight-DCU participated in the instance search (INS), semantic indexing (SIN), and localization tasks (LOC) this year.

In the INS task we used deep convolutional network features trained on external data and the query data for this year to train our system. We submitted four runs, three based on convolutional network features, and one based on SIFT/BoW. F A insightdcu 1 was an automatic run using features from the last convolutional layer of a deep network with bag-of-words encoding and achieved 0.123 mAP. F A insightdcu 2 modified the previous run to use re-ranking based on an R-CNN model and achieved 0.111 mAP. I A insightdcu 3, our interactive run, achieved 0.269 mAP. Our SIFT-based run F A insightdcu 2 used weak geometric consistency to improve performance over the previous year to 0.187 mAP. Overall we found that using features from the convolutional layers improved performance over features from the fully connected layers used in previous years, and that weak geometric consistency improves performance for local feature ranking.

In the SIN task we again used convolutional network features, this time fine-tuning a network pretrained on external data for the task. We submitted four runs, 2C D A insightdcu.15 1..4 varying the top-level learning algorithm and use of concept co-occurrence. 2C D A insightdcu.15 1 used a linear SVM top-level learner, and achieved 0.63 mAP. Exploiting concept co-occurrence improved the accuracy of our logistic regression run 2C D A insightdcu.15 3 from 0.058 mAP to 0.6 2C D A insightdcu.15 3.

Our LOC system used training data from IACC.1.B and features similar to our INS run, but using a VLAD encoding instead of a bag-of-words. Unfortunately there was a problem with the run that we are still investigating.

Note: UPC and NII participated only in the INS task of this submission.

}, url = {http://www-nlpir.nist.gov/projects/tvpubs/tv.pubs.15.org.html}, author = {Kevin McGuinness and Mohedano, Eva and Amaia Salvador and Zhang, ZhenXing and Marsden, Mark and Wang, Peng and Jargalsaikhan, Iveel and Antony, Joseph and Xavier Gir{\'o}-i-Nieto and Satoh, Shin{\textquoteright}ichi and O{\textquoteright}Connor, N. and Smeaton, Alan F.} } @mastersthesis {xPorta, title = {Rapid Serial Visual Presentation for Relevance Feedback in Image Retrieval with EEG Signals}, year = {2015}, abstract = {Studies: Bachelor degree in Science and Telecommunication Technologies Engineering at Telecom BCN-ETSETB from the Technical University of Catalonia (UPC)

Grade: A (9/10)

This thesis explores the potential of relevance feedback for image retrieval using EEG signals for human-computer interaction. This project aims at studying the optimal parameters of a rapid serial visual presentation (RSVP) of frames from a video database when the user is searching for an object instance. The simulations reported in this thesis assess the trade-off between using a small or a large number of images in each RSVP round that captures the user feedback. While short RSVP rounds let the system learn the user's intention quickly, RSVP rounds must also be long enough to let users generate the P300 EEG signals which are triggered by relevant images. This work also addresses the problem of how to distribute potentially relevant and non-relevant images in an RSVP round to maximize the probability of displaying each relevant frame at least 1 second apart from any other relevant frame, as this configuration generates a cleaner P300 EEG signal. The presented simulations are based on a realistic setup for video retrieval with a subset of 1,000 frames from the TRECVID 2014 Instance Search task.

}, keywords = {eeg, feedback, image, relevance, retrieval}, author = {Porta, Sergi}, editor = {Amaia Salvador and Mohedano, Eva and Xavier Gir{\'o}-i-Nieto and O{\textquoteright}Connor, N.} } @conference {cMcGuinness, title = {Insight Centre for Data Analytics (DCU) at TRECVid 2014: Instance Search and Semantic Indexing Tasks}, booktitle = {2014 TRECVID Workshop}, year = {2014}, month = {11/2014}, publisher = {National Institute of Standards and Technology (NIST)}, organization = {National Institute of Standards and Technology (NIST)}, address = {Orlando, Florida (USA)}, abstract = {Insight-DCU participated in the instance search (INS) and semantic indexing (SIN) tasks in 2014. Two very different approaches were submitted for instance search, one based on features extracted using pre-trained deep convolutional neural networks (CNNs), and another based on local SIFT features, large vocabulary visual bag-of-words aggregation, inverted index-based lookup, and geometric verification on the top-N retrieved results. Two interactive runs and two automatic runs were submitted; the best interactive run achieved a mAP of 0.135 and the best automatic run 0.12. Our semantic indexing runs were also based on convolutional neural network features, and on Support Vector Machine classifiers with linear and RBF kernels. One run was submitted to the main task, two to the no-annotation task, and one to the progress task. Data for the no-annotation task was gathered from Google Images and ImageNet. The main task run achieved a mAP of 0.086, the best no-annotation run came close to the main run with a mAP of 0.080, and the progress run achieved 0.043.

[2014 TREC Video Retrieval Evaluation Notebook Papers and Slides]

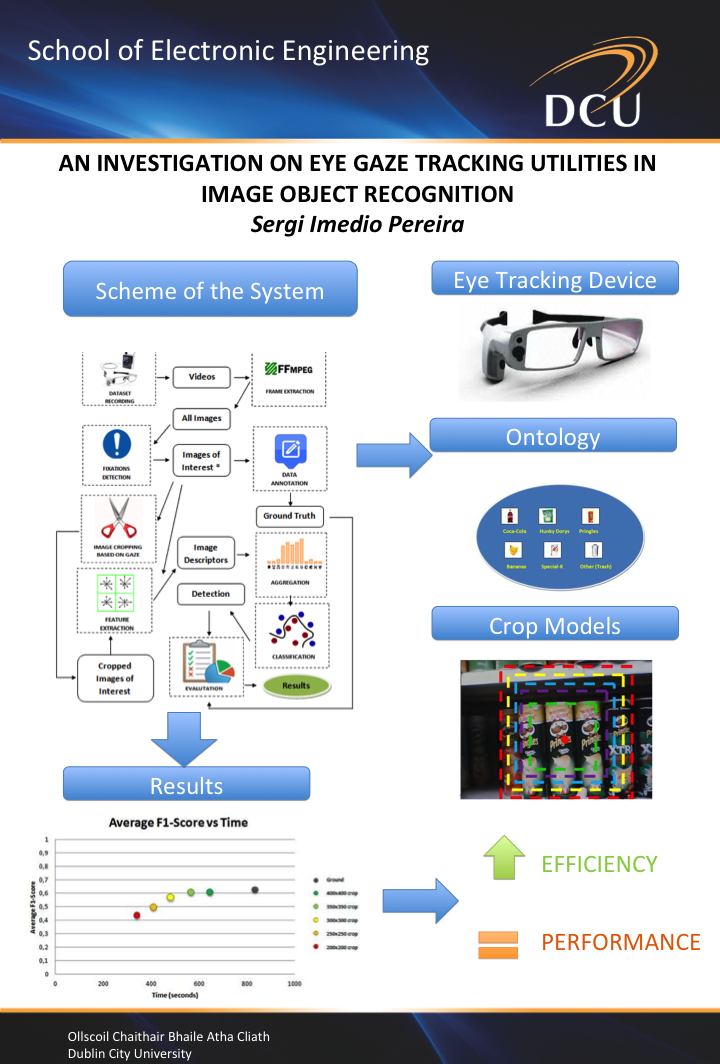

Computer vision has been one of the most revolutionary technologies of the last few decades. This project investigates how to improve an image recognition system (image classifier) using a little-exploited technology: eye gaze tracking. The aim of this project is to explore the benefits that this technology can bring to an image classifier. The experiment consists of building a dataset with an eye tracking device and, using crops of different sizes taken from each image according to the eye tracking data, measuring how the performance of an image classifier is affected. The results are interesting. Since this method processes smaller images, the system is more efficient. The performance is very similar to that obtained without any eye tracking data, so it can be argued that the method is an improvement, and it opens new directions of investigation for future work.

This paper explores the potential of brain-computer interfaces in segmenting objects from images. Our approach is centered around designing an effective method for displaying the image parts to the users such that they generate measurable brain reactions. When an image region, specifically a block of pixels, is displayed we estimate the probability of the block containing the object of interest using a score based on EEG activity. After several such blocks are displayed, the resulting probability map is binarized and combined with the GrabCut algorithm to segment the image into object and background regions. This study shows that BCI and simple EEG analysis are useful in locating object boundaries in images.

}, keywords = {Brain-computer interfaces, Electroencephalography, GrabCut algorithm, Interactive segmentation, Object segmentation, rapid serial visual presentation}, doi = {10.1145/2647868.2654896}, url = {http://arxiv.org/abs/1408.4363}, author = {Mohedano, Eva and Healy, Graham and Kevin McGuinness and Xavier Gir{\'o}-i-Nieto and O{\textquoteright}Connor, N. and Smeaton, Alan F.} } @mastersthesis {xMohedano13, title = {Investigating EEG for Saliency and Segmentation Applications in Image Processing}, year = {2013}, abstract = {Advisors: Kevin McGuinness, Xavier Gir{\'o}-i-Nieto, Noel O{\textquoteright}Connor

School: Dublin City University (Ireland)

The main objective of this project is to implement a new way to compute saliency maps and to locate an object in an image by using a brain-computer interface. To achieve this, the project is centered on designing the proper way to display the different parts of the images to the users in such a way that they generate measurable reactions. Once an image window is shown, the objective is to compute a score based on the EEG activity and compare its result with the current automatic methods to estimate saliency maps. Also, the aim of this work is to use the EEG map as a seed for another segmentation algorithm that will extract the object from the background in an image. This study provides evidence that BCIs are useful to find the location of objects in simple images via straightforward EEG analysis, and this represents the starting point to locate objects in more complex images.

Related post on BitSearch.

The aim of the SCHEMA Network of Excellence is to bring together a critical mass of universities, research centers, industrial partners and end users, in order to design a reference system for content-based semantic scene analysis, interpretation and understanding. Relevant research areas include: content-based multimedia analysis and automatic annotation of semantic multimedia content, combined textual and multimedia information retrieval, semantic-web, MPEG-7 and MPEG-21 standards, user interfaces and human factors. In this paper, recent advances in content-based analysis, indexing and retrieval of digital media within the SCHEMA Network are presented. These advances will be integrated in the SCHEMA module-based, expandable reference system.

In this paper we present the Qimera segmentation platform and describe the different approaches to segmentation that have been implemented in the system to date. Analysis techniques have been implemented for both region-based and object-based segmentation. The region-based segmentation algorithms include: a colour segmentation algorithm based on a modified Recursive Shortest Spanning Tree (RSST) approach, an implementation of a colour image segmentation algorithm based on the K-Means-with-Connectivity-Constraint (KMCC) algorithm and an approach based on the Expectation Maximization (EM) algorithm applied in a 6D colour/texture space. A semi-automatic approach to object segmentation that uses the modified RSST approach is outlined. An automatic object segmentation approach via snake propagation within a level-set framework is also described. Illustrative segmentation results are presented in all cases. Plans for future research within the Qimera project are also discussed.

}, isbn = {978-84-612-2373-2}, author = {O{\textquoteright}Connor, N. and Sav, S. and Adamek, T. and Mezaris, V. and Kompatsiaris, I. and Lui, T. and Izquierdo, E. and Ferran, C. and Casas, J.} } @article {aSalembier00a, title = {Description Schemes for Video Programs, Users and Devices}, journal = {Signal processing: image communication}, volume = {16}, number = {1}, year = {2000}, pages = {211{\textendash}234}, issn = {0923-5965}, author = {Salembier, P. and Richard, Q. and O{\textquoteright}Connor, N. and Correia, P. and Sezan, I and van Beek, P} } @conference {cSalembier99, title = {The DICEMAN description schemes for still images and video sequences}, booktitle = {Workshop on Image Analysis for Multimedia Application Services, WIAMIS{\textquoteright}99}, year = {1999}, pages = {25{\textendash}34}, address = {Berlin, Germany}, isbn = {84-7653-885-5}, author = {Salembier, P. and O{\textquoteright}Connor, N. and Correa, P. and Ward, L} } @conference {cSalembier99a, title = {Hierarchical visual description schemes for still images and video sequences}, booktitle = {1999 IEEE International Conference on Image Processing, ICIP 1999}, year = {1999}, address = {Kobe, Japan}, author = {Salembier, P. and O{\textquoteright}Connor, N. and Correia, P. and Pereira, F.} }