A fully automatic technique for segmenting the liver and localizing its unhealthy tissues is a convenient tool in order to diagnose hepatic diseases and assess the response to the according treatments. In this work we propose a method to segment the liver and its lesions from Computed Tomography (CT) scans using Convolutional Neural Networks (CNNs), that have proven good results in a variety of computer vision tasks, including medical imaging. The network that segments the lesions consists of a cascaded architecture, which first focuses on the region of the liver in order to segment the lesions on it. Moreover, we train a detector to localize the lesions, and mask the results of the segmentation network with the positive detections. The segmentation architecture is based on DRIU, a Fully Convolutional Network (FCN) with side outputs that work on feature maps of different resolutions, to finally\ benefit from the multi-scale information learned by different stages of the network. The main contribution of this work is the use of a detector to localize the lesions, which we show to be beneficial to remove false positives triggered by the segmentation network.

\

Program:\ Master{\textquoteright}s Degree in Telecommunications Engineering

Grade: A with honours (10.0/10.0)

A fully automatic technique for segmenting the liver and localizing its unhealthy tissues is a convenient tool in order to diagnose hepatic diseases and also to assess the response to the according treatments. In this thesis we propose a method to segment the liver and its lesions from Computed Tomography (CT) scans, as well as other anatomical structures and organs of the human body. We have used Convolutional Neural Networks (CNNs), that have proven good results in a variety of tasks, including medical imaging. The network to segment the lesions consists of a cascaded architecture, which first focuses on the liver region in order to segment the lesion. Moreover, we train a detector to localize the lesions and just keep those pixels from the output of the segmentation network where a lesion is detected. The segmentation architecture is based on DRIU [24], a Fully Convolutional Network (FCN) with side outputs that work at feature maps of different resolutions, to finally benefit from the multi-scale information learned by different stages of the network. Our pipeline is 2.5D, as the input of the network is a stack of consecutive slices of the CT scans. We also study different methods to benefit from the liver segmentation in order to delineate the lesion. The main focus of this work is to use the detector to localize the lesions, as we demonstrate that it helps to remove false positives triggered by the segmentation network. The benefits of using a detector on top of the segmentation is that the detector acquires a more global insight of the healthiness of a liver tissue compared to the segmentation network, whose final output is pixel-wise and is not forced to take a global decision over a whole liver patch. We show experiments with the LiTS dataset for the lesion and liver segmentation. In order to prove the generality of the segmentation network, we also segment several anatomical structures from the Visceral dataset.

We propose a unified approach for bottom-up hierarchical image segmentation and object proposal generation for recognition, called Multiscale Combinatorial Grouping (MCG). For this purpose, we first develop a fast normalized cuts algorithm. We then propose a high-performance hierarchical segmenter that makes effective use of multiscale information. Finally, we propose a grouping strategy that combines our multiscale regions into highly-accurate object proposals by exploring efficiently their combinatorial space. We also present Single-scale Combinatorial Grouping (SCG), a faster version of MCG that produces competitive proposals in under five second per image. We conduct an extensive and comprehensive empirical validation on the BSDS500, SegVOC12, SBD, and COCO datasets, showing that MCG produces state-of-the-art contours, hierarchical regions, and object proposals.

}, url = {http://arxiv.org/abs/1503.00848v1}, author = {Jordi Pont-Tuset and Pablo Arbelaez and Jonathan T. Barron and Marqu{\'e}s, F. and Jitendra Malik} } @article {Pont-Tuset2015c, title = {Supervised Evaluation of Image Segmentation and Object Proposal Techniques}, journal = {IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI)}, volume = {38}, year = {2016}, pages = {1465 - 1478}, author = {Jordi Pont-Tuset and Marqu{\'e}s, F.} } @article {aGiro-i-Nieto13, title = {From Global Image Annotation to Interactive Object Segmentation}, journal = {Multimedia Tools and Applications}, volume = {70}, year = {2014}, month = {05/2014}, chapter = {475}, abstract = {This paper presents a graphical environment for the annotation of still images that works both at the global and local scales. At the global scale, each image can be tagged with positive, negative and neutral labels referred to a semantic class from an ontology. These annotations can be used to train and evaluate an image classifier. A finer annotation at a local scale is also available for interactive segmentation of objects. This process is formulated as a selection of regions from a precomputed hierarchical partition called Binary Partition Tree. Three different semi-supervised methods have been presented and evaluated: bounding boxes, scribbles and hierarchical navigation. The implemented Java source code is published under a free software license.

}, keywords = {annotation, Hierarchical, Interaction, Multiscale, segmentation}, doi = {10.1007/s11042-013-1374-3}, author = {Xavier Gir{\'o}-i-Nieto and Martos, Manel and Mohedano, Eva and Jordi Pont-Tuset} } @phdthesis {dPont-Tuset14, title = {Image Segmentation Evaluation and Its Application to Object Detection}, year = {2014}, month = {0/2014}, school = {Universitat Polit{\`e}cnica de Catalunya, BarcelonaTech}, address = {Barcelona}, abstract = {The first two parts of this Thesis are focused on the study of the supervised evaluation of image segmentation algorithms. Supervised in the sense that the segmentation results are compared to a human-made annotation, known as ground truth, by means of different measures of similarity. The evaluation depends, therefore, on three main points.

First, the image segmentation techniques we evaluate. We review the state of the art in image segmentation, making an explicit difference between those techniques that provide a flat output, that is, a single clustering of the set of pixels into regions; and those that produce a hierarchical segmentation, that is, a tree-like structure that represents regions at different scales from the details to the whole image.

Second, ground-truth databases are of paramount importance in the evaluation. They can be divided into those annotated only at object level, that is, with marked sets of pixels that refer to objects that do not cover the whole image; or those with annotated full partitions, which provide a full clustering of all pixels in an image. Depending on the type of database, we say that the analysis is done from an object perspective or from a partition perspective.

Finally, the similarity measures used to compare the generated results to the ground truth are what will provide us with a quantitative tool to evaluate whether our results are good, and in which way they can be improved. The main contributions of the first parts of the thesis are in the field of the similarity measures.

First of all, from an object perspective, we review the existing measures to compare two object representations and show that some of them are equivalent. In order to evaluate full partitions and hierarchies against an object, one needs to select which of their regions form the object to be assessed. We review and improve these techniques by means of a mathematical model of the problem. This analysis allows us to show that hierarchies can represent objects much better with much less number of regions than flat partitions.

From a partition perspective, the literature about evaluation measures is large and entangled. Our first contribution is to review, structure, and deduplicate the measures available. We pro- vide a new measure that improves previous ones in terms of a set of qualitative and quantitative meta-measures. We also extend the measures on flat partitions to cover hierarchical segmentations.

The third part of this Thesis moves from the evaluation of image segmentation to its application to object detection. In particular, we build on some of the conclusions extracted in the first part to generate segmented object candidates. Given a set of hierarchies, we build the pairs and triplets of regions, we learn to combine the set from each hierarchy, and we rank them using low-level and mid-level cues. We conduct an extensive experimental validation that show that our method outperforms the state of the art in terms of object segmentation quality and object detection accuracy.

}, url = {http://hdl.handle.net/10803/134354}, author = {Jordi Pont-Tuset}, editor = {Marqu{\'e}s, F.} } @conference {cArbelaez14, title = {Multiscale Combinatorial Grouping}, booktitle = {Computer Vision and Pattern Recognition (CVPR)}, year = {2014}, abstract = {We propose a unified approach for bottom-up hierarchical image segmentation and object candidate generation for recognition, called Multiscale Combinatorial Grouping (MCG). For this purpose, we first develop a fast normalized cuts algorithm. We then propose a high-performance hierarchical segmenter that makes effective use of multiscale information. Finally, we propose a grouping strategy that combines our multiscale regions into highly-accurate object candidates by efficiently exploring their combinatorial space. We conduct extensive experiments on both the BSDS500 and on the PASCAL 2012 segmentation datasets, showing that MCG produces state-of-the-art contours, hierarchical regions, and object candidates.

\ Code and pre-computed results available here.

}, author = {Pablo Arbelaez and Jordi Pont-Tuset and Barron, Jon and Marqu{\'e}s, F. and Jitendra Malik} } @conference {cPont-Tuset13, title = {Measures and Meta-Measures for the Supervised Evaluation of Image Segmentation}, booktitle = {Computer Vision and Pattern Recognition (CVPR)}, year = {2013}, month = {06/2013}, abstract = {This paper tackles the supervised evaluation of image segmentation algorithms. First, it surveys and structures the measures used to compare the segmentation results with a ground truth database; and proposes a new measure: the precision-recall for objects and parts. To compare the goodness of these measures, it defines three quantitative meta-measures involving six state of the art segmentation methods. The meta-measures consist in assuming some plausible hypotheses about the results and assessing how well each measure reflects these hypotheses. As a conclusion, this paper proposes the precision-recall curves for boundaries and for objects-and-parts as the tool of choice for the supervised evaluation of image segmentation. We make the datasets and code of all the measures publicly available.

\

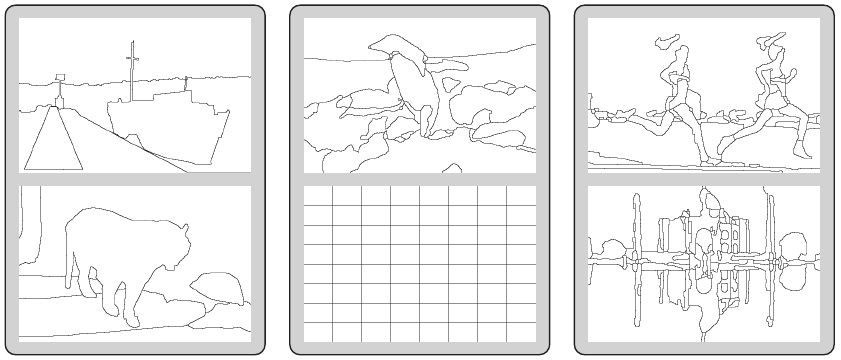

Examples of the meta-measure principles: How good are\ the evaluation measures at distinguishing these pairs of partitions?\

\

}, author = {Jordi Pont-Tuset and Marqu{\'e}s, F.} } @conference {cPont-Tuset12, title = {Supervised Assessment of Segmentation Hierarchies}, booktitle = {European Conference on Computer Vision (ECCV)}, year = {2012}, month = {01/2012}, abstract = {This paper addresses the problem of the supervised assessment of hierarchical region-based image representations. Given the large amount of partitions represented in such structures, the supervised assessment approaches in\ the literature are based on selecting a reduced set of representative partitions and\ evaluating their quality. Assessment results, therefore, depend on the partition selection strategy used. Instead, we propose to find the partition in the tree that best\ matches the ground-truth partition, that is, the upper-bound partition selection.

We show that different partition selection algorithms can lead to different conclusions regarding the quality of the assessed trees and that the upper-bound partition\ selection provides the following advantages: 1) it does not limit the assessment\ to a reduced set of partitions, and 2) it better discriminates the random trees from\ actual ones, which reflects a better qualitative behavior. We model the problem as\ a Linear Fractional Combinatorial Optimization (LFCO) problem, which makes\ the upper-bound selection feasible and efficient.

}, doi = {10.1007/978-3-642-33765-9_58}, author = {Jordi Pont-Tuset and Marqu{\'e}s, F.} } @conference {cPont-Tuset12a, title = {Upper-bound assessment of the spatial accuracy of hierarchical region-based image representations}, booktitle = {IEEE International Conference on Acoustics, Speech, and Signal Processing}, year = {2012}, month = {03/2012}, pages = {865-868}, abstract = {Hierarchical region-based image representations are versatile tools for segmentation, filtering, object detection, etc.\ The evaluation of their spatial accuracy has been usually performed assessing the final result of an algorithm based on\ this representation.\ Given its wide applicability, however, a direct supervised assessment, independent of any application, would be desirable and fair.

A brute-force assessment of all the partitions represented in the hierarchical structure would be a correct approach,\ but as we prove formally, it is computationally unfeasible.\ This paper presents an efficient algorithm to find the upper-bound performance of the representation and we show\ that the previous approximations in the literature can fail at finding this bound.

}, isbn = {978-1-4673-0044-5}, doi = {10.1109/ICASSP.2012.6288021}, author = {Jordi Pont-Tuset and Marqu{\'e}s, F.} } @conference {cPont-Tuset10, title = {Contour detection using binary partition trees}, booktitle = {IEEE International Conference on Image Processing}, year = {2010}, pages = {1609{\textendash}1612}, isbn = {?}, doi = {10.1109/ICIP.2010.5652339}, url = {http://ieeexplore.ieee.org/search/srchabstract.jsp?tp=\&arnumber=5652339\&queryText\%3DContour+detection+using+binary+partition+trees\%26openedRefinements\%3D*\%26searchField\%3DSearch+All}, author = {Jordi Pont-Tuset and Marqu{\'e}s, F.} } @mastersthesis {xVentura10, title = {Image-Based Query by Example Using MPEG-7 Visual Descriptors}, year = {2010}, abstract = {This project presents the design and implementation of a Content-Based Image Re- trieval (CBIR) system where queries are formulated by visual examples through a graphical interface. Visual descriptors and similarity measures implemented in this work followed mainly those defined in the MPEG-7 standard although, when necessary, extensions are proposed. Despite the fact that this is an image-based system, all the proposed descriptors have been implemented for both image and region queries, allowing the future system upgrade to support region-based queries. This way, even a contour shape descriptor has been developed, which has no sense for the whole image. The system has been assessed on different benchmark databases; namely, MPEG-7 Common Color Dataset, and Corel Dataset. The evaluation has been performed for isolated descriptors as well as for combinations of them. The strategy studied in this work to gather the information obtained from the whole set of computed descriptors is weighting the rank list for each isolated descriptor.\

\

}, url = {http://upcommons.upc.edu/pfc/handle/2099.1/9453}, author = {Ventura, C.}, editor = {Marqu{\'e}s, F. and Jordi Pont-Tuset} } @conference {cGiro10a, title = {System architecture of a web service for Content-Based Image Retrieval}, booktitle = {ACM International Conference On Image And Video Retrieval 2010}, year = {2010}, pages = {358{\textendash}365}, abstract = {This paper presents the system architecture of a Content-Based Image Retrieval system implemented as a web service. The proposed solution is composed of two parts, a client running a graphical user interface for query formulation and a server where the search engine explores an image repository. The separation of the user interface and the search engine follows a Service as a Software (SaaS) model, a type of cloud computing design where a single core system is online and available to authorized clients. The proposed architecture follows the REST software architecture and HTTP protocol for communications, two solutions that combined with metadata coded in RDF, make the proposed system ready for its integration in the semantic web. User queries are formulated by visual examples through a graphical interface and content is remotely accessed also through HTTP communication. Visual descriptors and similarity measures implemented in this work are mostly defined in the MPEG-7 standard, while textual metadata is coded according to the Dublin Core specifications.

}, isbn = {978-1-4503-0117-6}, doi = {10.1145/1816041.1816093}, url = {http://doi.acm.org/10.1145/1816041.1816093}, author = {Xavier Gir{\'o}-i-Nieto and Ventura, C. and Jordi Pont-Tuset and Cort{\'e}s, S. and Marqu{\'e}s, F.} }